For the Provocations series, in conjunction with UCI’s “The Future of the Future: The Ethics and Implications of AI” conference.

In their book The End of Capitalism (As We Knew It), J.K. Gibson-Graham, a two-person writing team, examine a conundrum: after innumerable analyses of capitalism’s inherent contradictions, and despite decades of projects devoted specifically to accelerating its demise, capitalism seems as vibrant as ever. Gibson-Graham ask, “In the face of these efforts, how has capitalism maintained such a strong grip on political economy?” The answer they offer is oblique but striking: perhaps it hasn’t.

More precisely, they suggest that the conventional wisdom that economic life is dominated by capitalist relations is not, in fact, true. They point to the wide range of forms of economic engagement that fall outside the limits of traditional political economy (domestic activity, relations of care, mutual support, self-sustenance, and more) to argue that capitalism is only one amongst a range of concurrent forms of economic life, and perhaps not even the most common. The mistake, they argue, has been to acquiesce to the way that analysts of political economy have excluded these other forms of economic relations as objects of study. They argue for a new analysis of political economy that rejects the conventional wisdom and embraces already existing alternatives.

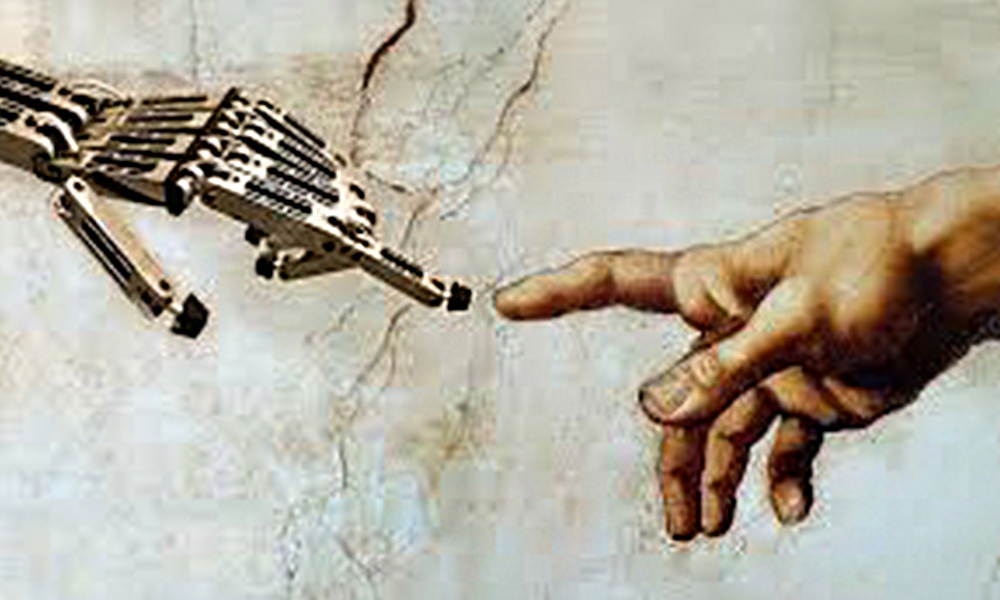

Taking our cue from Gibson-Graham, we may want to think similarly about artificial intelligence. AI boosters and critics alike subscribe to a narrative of AI as an astounding, if nascent, technological success, one that leads inexorably towards new patterns of data exploitation, large-scale inference, and predictive ability. AI futures seem just around the corner, for good or ill. But if Gibson-Graham argue that we cede too easily to capitalism its all-encompassing reach, I suggest that we cede too easily to AI the relentless perfectibility of its algorithms. Instead of thinking of AI in terms of technological grandeur, suppose we think of it as something much messier — as something that limps along, something that barely functions, something that must be propped up in order to operate effectively.

We can catch a glimpse of this by recognizing the degree to which AI’s success depends critically on our own interpretive work. AI thrives in cases where there is no correct answer to be identified or determined. When Google tells us that a particular web page is absolutely the best match for our query, we are in no position to disagree or to scrutinize the claim. When an algorithm suggests that one person might default on a loan but another will likely not, we have no basis on which to check. We are not able to ask whether these decisions are right or wrong, but only whether they are plausible. When AI misidentifies a tiger as a cat, we give it the benefit of the doubt — “close enough.” In other words, AI’s technical success is sustained primarily by our own acquiescence.

It’s remarkable, in fact, that our fears about the widespread deployment of facial recognition technology are not more regularly undermined by repeated encounters with the everyday failures of that technology on our phones. Nor do we seem to reassess our concerns about auditory surveillance in the face of the repeated challenges of getting a voice response system to recognize a credit card number. The prospects for autonomous vehicles are somehow immune to the observation that Tesla drivers still can’t use Autopilot in the rain.

Indeed, AI is not even as “artificial” as it appears. In their recent book Ghost Work, Mary Gray and Siddharth Suri describe the prevalence of “ghost work,” the hidden human labor that makes AI function. Many AI applications are actually sustained by people behind the scenes doing the work of, say, image recognition. Ever needed to prove to a website that you are not a robot by identifying which images contain traffic lights or crosswalks? Congratulations! You’re an image-recognition AI agent!

AI’s achievements, then, are neither as definitive nor as complete as they might seem, and its futures may be much more in question than we imagine. If we adopt a more evaluative stance, we can see AI in a new light. What might we achieve by taking this perspective? What purchase might we gain on the questions and problems of contemporary AI?

First, we might recognize that the seemingly relentless march of AI technology is not inevitable. AI technologies and capacities are much more contingent achievements than they might seem. Further, if AI futures are not inevitable, then they may be controllable, choosable, or preventable.

Second, and consequently, we might recognize the importance of human agency in the development of AI, reframing a conversation about regulation, control, and management. When AI is seen not as a technological agenda but as a human one, arguments about the problems of regulating innovation start to lose their force. We are all participants in sustaining AI and might thus all have a role to play in reimagining it.

Finally, we might regain a healthy skepticism, especially towards those apparent achievements of AI that are, prima facie, ludicrous. Take, for example, the popular response to the proposal that deep learning can analyze photographs to identify sexual orientation. Our first response to this should not be to worry about how such power could be abused. Our first response, rather, should be that this is clearly and obviously nonsense and that something smells distinctly fishy. That it is not immediately obvious to us that something else is going on (which turned out in this case to be about the visual conventions of different online dating sites) signals a worrying credulity. Getting rid of our wish that alleged AI achievements be true is surely the first step in reconfiguring our relationship with these technologies.

This is, however, by no means an argument for complacency. The problems of data privacy and data piracy, and of algorithmic bias and algorithmic injustice, are very real. Indeed, it is precisely because AI fails to live up to the claims made for it that critical attention is needed. The challenges of AI are not the challenges of artificial personhood. They are the challenges of deciding the restrictions we should impose on how a limited and brittle technology might be used.

Paul Dourish, Chancellor’s Professor of Informatics in the Donald Bren School of Information and Computer Sciences at UCI, is best known for his work and research at the intersection of computer science and social science. He is the author, most recently, of The Stuff of Bits: An Essay on the Materialities of Information.