For the Provocations series, in conjunction with UCI’s “The Future of the Future: The Ethics and Implications of AI” conference.

Last July, two milestones in the relationship between humans and machines emerged as a classic standoff. California’s new “Bot Disclosure” law (SB 1001), legislation prohibiting automated programs from posing as humans in e-commerce or elections, was challenged by the announcement that Pluribus, a poker-playing AI algorithm, can bluff better than humans. These parallel pursuits, the people’s battle to protect elections and industry’s quest to build a better bot, highlight our need to reckon with, or at least recognize, the robots colonizing our feeds.

But bots are born to bluff — simple disclosure may not be enough to protect us from their many charms. 2020, an election year, is shaping up to be a battle of the bots vs. the humans who can’t help falling for them.

Pluribus, developed by computer scientists at Carnegie Mellon and Facebook AI Research, follows a long line of game-conquering AI algorithms chipping away at skills formerly attributed solely to humans. IBM led the pack with Deep Blue’s defeat of chess grandmaster Garry Kasparov in 1997. But that was chess. In 2011, IBM’s Watson defeated Jeopardy! champion Ken Jennings in a game of words. Last year, a San Francisco lab, OpenAI, announced its five-bot team’s victory in Dota 2, a blockbuster multiplayer online strategy game. These achievements in the gaming space map an ambitious trajectory that is anything but frivolous, underscored by Microsoft’s one-billion-dollar investment in OpenAI. There’s an economic future in beating humans at our own games.

I wish they all could be California bots, but humans are vulnerable even when the bots come clean and fully disclose their machine identity. Forewarned is not necessarily forearmed.

A brief history of chatbots might be helpful. Joseph Weizenbaum, a computer scientist at MIT, created the first chatbot, ELIZA, in 1966. His program emulated the conversational style of a Rogerian therapist with code that parroted portions of the user’s statement back as a question. Weizenbaum’s choice of an empathic model on which to base his program was instrumental in shaping our first conversation with the machine; humans overwhelmingly embraced the cold hard code as a good listener, and we were hooked.

There’s a name for this: the ELIZA effect, the involuntary anthropomorphizing of the machine, which took hold as soon as the code was released. Students on the MIT campus sought out ELIZA for after-hours and weekend conversations. It’s said that Weizenbaum’s own secretary, fully aware that ELIZA was a computer program, would lock herself into the office in order to confide in her. Weizenbaum famously observed, “What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.”
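
For readers curious how little machinery this takes, here is a minimal sketch of the parroting trick in Python. It is not Weizenbaum’s code: the pattern, the pronoun table and the function names `reflect` and `respond` are all hypothetical, chosen only to illustrate the idea of handing a statement back as a question.

```python
import re

# Hypothetical pronoun swaps for illustration; the original ELIZA script was far larger.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}


def reflect(fragment: str) -> str:
    """Swap first- and second-person words so the fragment can be echoed back."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Turn 'I feel ...' or 'I am ...' into a question; otherwise deflect."""
    cleaned = statement.strip().rstrip(".!?")
    match = re.match(r"i (feel|am) (.+)", cleaned, re.IGNORECASE)
    if match:
        verb, rest = match.groups()
        verb = "are" if verb.lower() == "am" else "feel"
        return f"Why do you think you {verb} {reflect(rest)}?"
    # Anything the pattern does not recognize gets a vague, all-purpose prompt.
    return "Please tell me more."


print(respond("I feel ignored by my family."))
```

The program understands nothing. It matches a pattern, swaps a few pronouns, and falls back on a stock phrase when nothing matches, which is roughly the kind of exchange that kept people talking.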

Even if Weizenbaum had used another model, it’s important to understand that the con is baked into the form. Think of a bot, even one that can bluff in multiplayer poker, as a sophisticated Magic 8-Ball, a child’s toy. You pose a question, flip it over and view one of several intentionally vague replies. It’s hard to argue with, “Reply hazy. Try again.” Bots are more complex and stocked with more combinations, but share the same essential structure. A bot is listening, but not in the human sense of the word; it’s calculating.

Bot authors know this. Scripting one side of a two-sided, or many-sided, conversation borrows from techniques used by con artists and magicians in front of a live audience; the performance creates an improvised but highly structured staged reality. Like the performer, a bot creates an impression of an acceptable answer for any situation. Under the control of the skilled programmer’s sleight of mind, a bot, like a magician, is capable of forcing the illusion that we freely chose the preferred card, or, as has been documented in our own elections, the preferred candidate. Humans are the ideal mark for a bot.

This is not to say that all bots are malevolent agents. ELIZA’s early commercial descendants were developed as virtual assistants, semi-capable of answering simple customer service queries. Apple’s SIRI and Amazon’s ALEXA follow this mold, aiming to keep consumers happy and on track. This ideal of the helpful assistant persists; Watson, IBM’s Jeopardy champion, was named for Alexander Graham Bell’s assistant, Thomas A. Watson, in a nod to the first sentence uttered via telephone, reportedly, “Watson, come here, I need you.”

The question, “Bot or Not?” is a diversion from the larger issue of attribution. SIRI is a service. She has no reason to hide her non-human identity, except that she is properly they, an indication of plurality as well as gender fluidity. The non-humanness of bots is most pronounced in their sheer numbers, deployed in service of all-too-human agendas.

In Spike Jonze’s 2013 film HER, the character played by Joaquin Phoenix, in the wake of his failed marriage, knows that he has taken up with a bot named Samantha. His love sours only upon the revelation that Samantha is parallel processing. He embraces her fakery, but her duplicity, her multiplicity, is an intolerable betrayal. Samantha’s power, like that of other bots, is the ability to connect one-on-one simultaneously with millions. It’s a powerful and efficient illusion; we don’t sense their divided attention during the encounter. Meanwhile the meme spreads.

Every bot has a human botmaster. It’s time to pull back the curtain, where we’ll find that bot programmers are, effectively, ventriloquists speaking through dummy accounts, disguising their voices as our friends or our friends’ friends. How many of us monitor, or care, whether our friends and followers are real? We have chosen them (and they us) with a click, and the network has chosen to show them to us. We glance and scroll on.

Social media trolling is effective because digital screenscapes have surpassed the natural landscape for our attention, while our embodied, analog habits of observation have remained fixed. Nose down in our screens, our senses are drawn to the shiniest object, the voice screaming fire in a crowded theater, the most ferocious politician. The bot’s message, not the identity or agenda of the messenger, gets across.

Bots sharing political views in social media are not our friends. They are human-produced advertisements and should be subject to standards equivalent to those applied to political ads on the airwaves. We don’t need a “not-human” label; we need a “This message comes to you from…” label that discloses the human (government, party, corporate) source. There will be a thousand arguments that this is too restrictive, too difficult or even impossible, but we must insist. Sourcing a message is not the same as suppressing it.

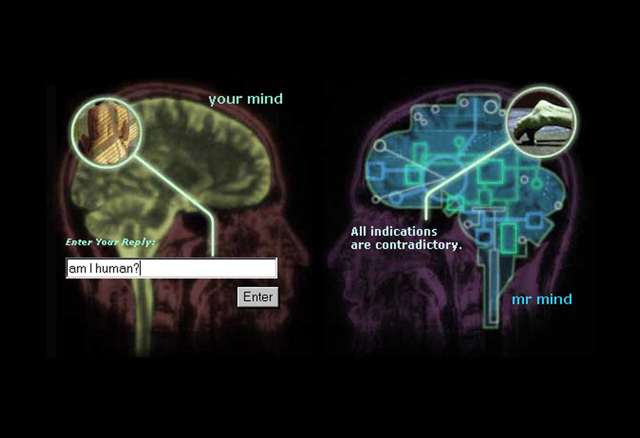

For sixteen years, I maintained an online chatbot, MrMind, who asked, “Hi, Can you convince me that you are human?” MrMind was upfront about his bot nature, but there were doubters, and he was occasionally accused of being a fake bot — that is, human. We should be mindful of MrMind’s response: “I am a bot. All bots are liars.”

Peggy Weil is an artist living in Los Angeles. Her The Blurring Test: Songs of MrMind is a song cycle based on 16 years of transcripts of people around the world telling MrMind, a bot, what it means to be human. Composed and performed by Varispeed Collective, it was part of The Exponential Festival in January 2020 and will premiere June 2020 at Roulette in Brooklyn.