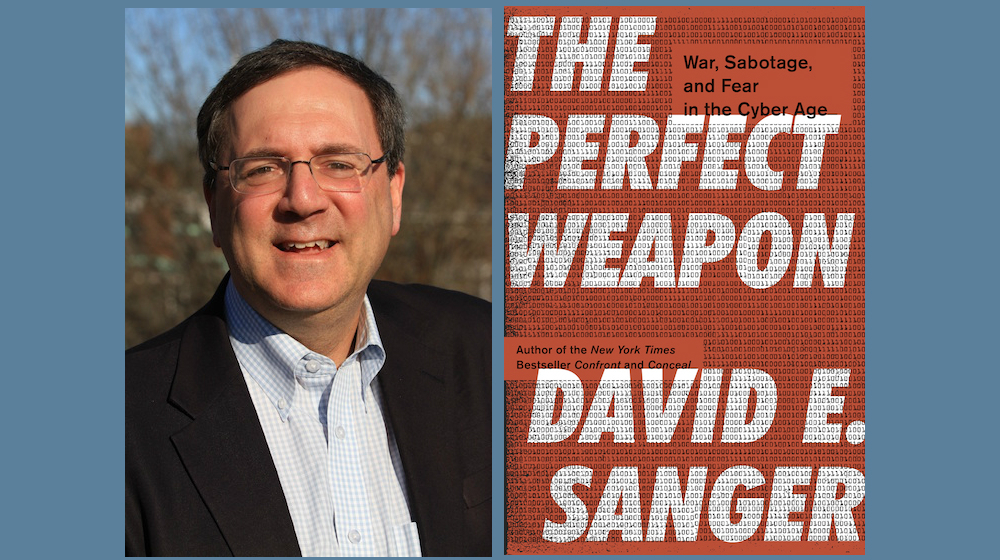

What do preceding arms races tell us (and what do they obscure) about the future of cyber conflict? What happens when even America’s impoverished adversaries suddenly sense new prospects for undermining (even if never directly confronting) American democracy? When I want to ask such questions, I pose them to David E. Sanger. This conversation (transcribed by Christopher Raguz) focuses on Sanger’s book The Perfect Weapon: War, Sabotage, and Fear in the Cyber Age. Sanger, a national-security correspondent for The New York Times, has been on three teams that have won Pulitzer Prizes, most recently in 2017 for international reporting. He is also the author of The Inheritance: The World Obama Confronts and the Challenges to American Power (2009) and Confront and Conceal: Obama’s Secret Wars and Surprising Use of American Power (2012), and he has won the Weintal Prize for diplomatic reporting, the Aldo Beckman prize for coverage of the presidency, and (in two separate years) the Merriman Smith Memorial Award for coverage of national-security issues.

¤

ANDY FITCH: The Perfect Weapon opens on a momentous policy pivot, with the Pentagon recommending the U.S. publicly declare nuclear retaliation possible for any future cyber attack. That policy pivot, however, never produces an especially vivid scene capturing public attention and catalyzing public discussion (as, say, Pearl Harbor, atom-bomb detonations in Japan, and the September 11th attacks each prompted lively, decades-long debate on how the U.S. should arm itself, or restrain itself, or strategize a qualitatively new type of military conflict). The Perfect Weapon does then offer one pretty disturbing scenario, as you describe our own military planning for future on-the-ground campaigns to start by frying power grids, potentially weaponizing an everyday Internet of things, potentially interrupting food and water supplies, medical care, public communications — all designed to cause panic and desperate in-fighting. But your book soon shifts back to its primary focus on exploring the difficulties (for the U.S. government, for the U.S. public, for various potential allies and adversaries) in detecting, disclosing, foreseeing both immediate and long-term developments in what former CIA Director Michael Hayden has described as the new, never fully visible domain of cyber warfare. Here, as one way into your compressed book’s many far-reaching implications, could you first describe your own lived experience figuring out how to report on largely un-representable, untraceable, unverifiable, often unacknowledged or perhaps even undetected cyber strikes? What did you have to teach yourself to start seeing, perhaps in the way that we teach ourselves to use a new technological interface?

DAVID E. SANGER: The defining event in cyber within the past decade was the operation codenamed Olympic Games, best known for the cyber worm Stuxnet. This was the American- and Israeli-developed weapon used against Iran’s nuclear infrastructure. I already had been writing about cyber for many years before we began to see this very strange piece of code circulating around the world in the summer of 2010. It seemed pretty obvious that this was probably a U.S.-driven attack, which then raised the questions: have we entered a new era, or should we simply consider this a slightly different weapon? Does cyber fundamentally change the way nations compete and fight? For years, many people have argued: “Oh, it’s just another weapon in the arsenal. Humans have progressed from stones to arrows, arrows to guns, guns to airplanes, mounted airplane guns to dropped bombs, airplanes to missiles.” Nuclear weapons, of course, sit in a completely different category, capable of such phenomenal destruction that, after using them twice, humans have never used them against an enemy again.

But that still leaves us with the fundamental question: what is cyber? Is it just another ordinary weapon (since both states and nonstate actors clearly now use it every single day)? I gradually arrived at the answer that cyber does get used every day, like many weapons, but has capacities we had not seen before, and that will be taken much further still. The Perfect Weapon tries to get at some of these longer-term implications, especially with cyber so inexpensive, with small states using it as easily as large states, and with cyber perhaps used most successfully by the least wired countries, which have less to fear from cyber retaliation.

Similarly, because it’s so easy to hide or misrepresent the origins of a cyber strike, most of our conventional thinking about deterrence gets thrown out the window. So The Perfect Weapon gathers together a decade’s worth of reporting and tries to signal to American readers (and to readers around the world): “Hey, this is fundamentally different, and we’re no place close to figuring out how deterrence works.”

So which analogy works best here — to the atom bomb? I don’t think so. In fact, you can consider nuclear weapons in many ways the opposite of cyber weapons. Nuclear weapons have an on/off button. You either detonate a nuclear bomb or you don’t. Cyber weapons work more like a thermostat: you can dial them up, you can dial them down, and they can take many forms. Most traditionally, cyber can take the form of espionage, basically of updated wire-tapping. But you also can use cyber for more active data-manipulation. Imagine if somebody broke into the military’s medical records, and changed the blood type of every American soldier, right? And of course you can use cyber for more directly destructive strikes. Our Olympic Games program targeted Iranian centrifuges. American attacks have damaged the North Korean missile program. But the North Koreans’ attack on Sony, which received relatively little attention, itself took out 70% of Sony’s computer capability. And finally, of course, cyber can be used in the information-warfare techniques we saw play out with the 2016 election.

Given that diverse range of possibilities, do you have a straightforward answer when someone asks: “So what is the ‘perfect weapon’ to which your title refers?” Would you simply answer “Cyber” (though again, some cyber experts caution us to think of cyber as a broader domain of engagement, rather than as a specific weapon)? Would it make more sense to emphasize the soft-target vulnerabilities, the internal doubts and dissent already lurking in one’s enemy, which just need to be weaponized? Or does your book’s provocative title (like this book’s principal topics) in fact not point back to any one clear, definitive source?

It’s interesting how you phrased that, since sabotage of course isn’t a weapon. Sabotage is a goal you might want to accomplish with a weapon. Espionage is another goal you might want to accomplish with a weapon. Data-manipulation is another goal you might want to achieve with a weapon, and destruction is a fourth. So to answer your question: this book presents cyber as the perfect weapon due to its adaptability to each of these potential goals, as well as due to its affordability and its deniability.

And more generally, how do you see this book parsing concepts like cyber attack, gray-zone conflict, hybrid war? Do we need to be able to differentiate these concepts and treat them separately, to see their operations and implications more clearly? Or do these invisible or opaque or mirage-like aspects of contemporary warfare make that sort of analytic parsing impossible from the start?

They don’t make it impossible. You can do hybrid warfare without cyber. You can do information warfare without cyber. Stalin did it for years. So then the question becomes: how does cyber make hybrid war different? Cyber makes it much easier to cross national boundaries undetected. Cyber makes deterrence harder. Instead of deploying “little green men” (basically, troops not in uniform), as Putin has done in eastern Ukraine, cyber takes the form of invisible electrons masquerading as something innocent, while being anything but. So cyber can enable and enhance and continue accelerating many of those tactics you mentioned.

To take a step back, and clarify what makes cyber different, I would point again to the Olympic Games initiative. We had many different options for dealing with Iranian nuclear centrifuges. Iran’s nuclear plants were not very deep. The Israelis could have gone in and flattened the place, and they thought about doing that. Of course if they had, that almost certainly would have provoked a major military response, and perhaps another war in the Middle East. But a subtle, deniable cyber strike doesn’t leave a smoking ruin. Your opponent has to make a more complex calculation in figuring out how to respond.

We actually faced the same types of calculations in the Sony case. We didn’t bomb the North Koreans after they attacked Sony. But had the North Koreans blown up the Sony computer center (instead of attacking it in a very subtle way, using computer code that did more destruction than you likely could achieve by bombing), our response would not have been so extremely muted (some sanctions I doubt the North Koreans ever felt). Or again, when the Russian election hacks started coming, we didn’t know how to respond. Part of this book takes up those debates within the Obama White House about how to respond. Do you just warn Putin? Do you expose his connections to the oligarchs? Do you cut off the Russians from the world financial system? We ended up doing almost none of those. So again, to answer your question: what makes cyber the perfect weapon is that, if used skillfully, it can accomplish many goals we’ve had for our weapons for a long time, but without triggering the same retaliatory reaction.

In terms then of a quick catch-up that the U.S. government and U.S. public need to do, could we pause on the (again overlapping) topics of proliferation and weaponization? The Perfect Weapon points out that rarely in history have such swift advancements in military technologies not led to outright war, and offers many additional reasons why any comforting historical analogy to strategies of nuclear deterrence breaks down: with nuclear conflict only possible in a narrow range of scenarios, but with cyber conflict already all-pervasive; with cumbersome nuclear delivery relatively easy to anticipate, track, trace back to its source; and with A.I. soon pushing speeds of cyber conflict far beyond any possibility for present-day humans to strategically intervene. And here we also can update the potential for rapid escalation by bringing in the fact that the U.S. already knows of countless foreign implants lodged within our digital circuitry, potentially weaponized at any moment by an enemy, casting into fundamental doubt the integrity of our defense systems to operate when we need them most — with preemptive strike suddenly starting to sound more rational. Those are present-day concerns I can articulate. But The Perfect Weapon also brings up the historical model of early-20th-century militaries first adopting airplane technology for 19th-century purposes (transporting small numbers of troops more quickly) until, after a few years, someone thought of mounting a machine gun on a plane. A few decades later, the Enola Gay drops its atomic bomb. So even as we address ominous prospects for cyber conflict in our present lives, what are some fundamental questions for you about how cyber technologies will continue to proliferate and be further weaponized?

Good question, because right now, if you use the airplane analogy (which I do consider more useful than the nuclear analogy), I think we’ve reached about the World War I stage with cyber. Over the past decade, we’ve witnessed the arrival of this new weapon. We’ve begun to sense but still have barely seen its much broader potential. And if nobody in 1908 could picture an airplane dropping an atomic bomb a few decades later, what might we ourselves see 40 years from now? So we do have to look at cyber as a delivery means, but without yet fully understanding what types of warheads it might deliver.

Here a couple of quick ideas come to mind. Data-theft seems pretty straightforward, so we can set that aside for now. Data-manipulation can have all sorts of subtle effects, because not only can it determine which blood type a patient receives, or how smoothly your election results get processed, but it can also psychologically undercut a whole society’s confidence in its everyday institutions.

Election influencing resonates so much because, from the creation of our Constitution forward, we’ve assumed that if you cast your ballot, your vote will get recorded correctly, with some very modest cases of voter fraud in the past (and, in John F. Kennedy’s era, maybe less than modest). By and large we’ve been able to rely on this electoral system. But now, for the first time, we have basic doubts about whether our votes will be recorded accurately, and whether we would even detect any tampering.

Or, for a few additional examples of a psychological attack causing people to question the very institutions and machinery on which their daily life depends: we might right now feel quite eager to reach an era of autonomous cars, so that we can step in and tell them to take us to the supermarket, to wait for us, then take us back. But what if it suddenly looks quite easy for somebody to have subtly programmed these cars to send us off a cliff when we’re not expecting it? Autonomous-car manufacturers face no greater danger than this scenario in which an unseen hand directs passengers someplace they definitely do not want to go. Another example directly involves our nuclear forces: our nuclear deterrence rests on the premise that, if Russia or China launched a strike, we could press a button and bring about the destruction of Moscow or Beijing or wherever this strike came from. But if suddenly you don’t know whether pressing the button will even work, or whether your own missiles might get redirected someplace else, then your method of deterrence falls apart, either because you fear using your own weapon, or (and I consider this more likely) because you decide to use your weapon early, so that if it doesn’t work, you still have some time to recalibrate. So again, all of these possibilities make cyber uniquely destabilizing, and not just a faster way of delivering the same bullet.

And to further cloud the picture, could you describe in more detail what it might mean for a country to come under cyber attack as part of a broader gray-zone attack? Could we use the example of recent Ukrainian history, and could you describe how hybrid-war techniques, as outlined by Russian General Valery Gerasimov (or by scholar Mark Galeotti, depending on whom you ask), combine and conflate public rhetoric, diplomatic coercion, media distortions, clandestine pressures, and unofficial military operations? And could you start to sketch how cyber warfare not only speeds up such escalating multipronged assaults, but also opens possibilities for broader types of information-dominance campaigns, in which an aggressor might focus less on destroying its rival’s civilian or industrial or military infrastructure than on breaking down public trust, undermining public authority, catalyzing public confusion and conflict? On which foundational premises (the ones a society has to agree on if it ever hopes to function, let alone to fend off a committed adversary) can Ukrainians no longer find consensus? How did they arrive there, and how might regional/cultural/economic divisions fracturing Ukrainian society at least partially parallel divides in the present-day U.S.?

I titled this book’s chapter on Ukraine “Putin’s Petri Dish,” because everything we’ve seen Putin do in the United States he did first in Ukraine. He attacked their electrical grid, turning off the power twice (in 2015 and again in 2016), not in nation-destabilizing ways, but enough to make Ukrainians doubt whether their country could keep the electricity on. So here Putin made the point: we know where you live, and we can come in and do this anytime we want.

Putin also manipulated their elections. During the Ukrainian presidential election, the Russians broke into the system that reports election results to the news media. The Russians reported that Yushchenko lost when he had in fact won. And the Russians knew that sooner or later the real results would come forward, but again they figured out a way to make Ukrainians wonder what the truth is. If somebody can hack the announced results, how much can you trust the officially confirmed results? That was a very subtle psychological trick.

But the Russians experimented with information warfare of many different kinds (false reports, reports of deaths, creations and amplifications of rumors). Facebook helped fuel a lot of this. And in a society as divided as Ukraine’s (with its Russian-speaking east, with Russia annexing Crimea, and then with its more European, Ukrainian-speaking west), you have a built-in cultural schism for your enemy to exploit. That starts to look pretty similar to what the Internet Research Agency does when it starts taking out Facebook ads, and creating social divisions around Black Lives Matter, or even ads promoting Texas secession. If you look at some of these ads, they’re pretty hilarious. But they worked.

So you see the Russians quickly excelling at the information-dominance components of cyber warfare. We lag pretty far behind on that. We excel at figuring out cyber attacks of a more physical type, like what we did against Iranian nuclear facilities. But information warfare doesn’t fit terribly well with our democratic ideals.

Here in terms of our own media’s and of social-media’s again overlapping roles and complicities in contemporary hybrid war, if we start from your incisive account of Russia’s Internet Research Agency successfully transforming and weaponizing social-media platforms in profound ways, in a remarkably compressed time-span (redirecting these outlets from facilitating the spread of pro-democracy movements, to inciting disagreement, fraying social bonds, driving polarized communities ever further apart), and if we then bring in the press’s role in pouncing on so much 2016 election-coverage “catnip” (unwittingly amplifying Putin’s capacities to outshout any more constructive public debate), I can’t help recalling the press’s anguished soul-searching after, say, Judith Miller and other reporters were seen to have been lured into abetting the George W. Bush administration’s disinformation campaign leading up to the 2003 Iraq invasion. Within that slightly broader historical context, what lessons does the press still need to absorb much more thoroughly about not having its own appetites and inclinations weaponized?

2016 seems to me very different from the mistakes made in the run-up to the Iraq War. Back then, the problem was the government mischaracterizing its intelligence, and the inability of our reporters (because of so much material being classified) to figure out where the points of dissonance were, what the holes in that intelligence were. We eventually got there. Unfortunately, we got there after the war already had begun.

In the case of the 2016 election, we could verify in real time that somebody had stolen all these emails that then started circulating. We could attribute this theft to the Russians quite early on. The United States government didn’t release its first statement on Russian culpability until October 7th, and only later acknowledged Putin’s direct involvement. But by July The New York Times had reported that the CIA had concluded with high confidence that the Russians (specifically the GRU, the Kremlin’s military-intelligence unit) had launched the DNC hack, as ordered by Putin himself. Still, when the press did report on all this catnip from the DNC leaks, we failed to keep highlighting for readers that they were only reading this material because the Russians wanted them to read it. We could have, and should have, done better with that.

Your book states that we have “almost no evidence” of Russia actively hacking ballot machines on election day 2016. I’d love to hear more about what “almost” means here. But more generally, The Perfect Weapon’s account of Russia “probing” so many state election networks (at least three dozen) leaves me wondering what sorts of operative implants these probes might have left behind. Or the continued complacent public rhetoric about our off-line ballot systems being unhackable leaves me wondering how to reconcile these claims with The Perfect Weapon’s careful documentation of the U.S. government itself pioneering the hacking of off-line systems at least a decade ago. And perhaps most pressingly, the enhanced incentive Russia and everybody else must now feel to manipulate our elections without threat of serious consequences compels me to ask: what does Russia’s 2016 intervention show us about how we need to shore up our basic democratic functioning (both for elections, and long before election day), and how to learn/apply these lessons (say in 2018/2020) without just falling into the familiar paradigm of building our defenses around lessons learned from the last war?

So we have to keep in mind both short-term and long-term lessons. Of course we already should have been responding by the time of President Trump’s January 2017 inauguration. And you can imagine a parallel universe in which the victorious president uses that occasion to say: “My fellow Americans, I believe I was legitimately elected. We have no evidence of people tampering with the actual ballots. But clearly the Russians attempted to get into our systems, and we need to understand every possible aspect and implication of that attempt. So as my first act as president, I’m appointing a 9/11-style commission. They will report back on an interim basis before the 2018 midterms, and they certainly will provide an extensive list of recommendations to strengthen our election system well before the next presidential election.”

Of course Donald Trump didn’t say that, because he couldn’t separate in his own mind the reports of Russian hacking from the legitimacy of his victory. So instead Trump created a commission (a commission that collapsed very quickly) to look for the three million illegal votes he alleged had been cast. We lost a huge opportunity to learn lessons, something we had done pretty well after 9/11, or after the Challenger and Columbia disasters.

So this leads into your question about what our vulnerabilities are, and what needs to change. I see three especially pressing vulnerabilities. The biggest risk is in the voter-registration system, because, by its very definition, that system should be outward-facing. We want to make it easier for people to register. We want to reach out to them online. And that of course leaves this system extra vulnerable to manipulation. The most serious probes we saw in 2016 targeted the Arizona and Illinois registration systems, and other registration systems were also probed.

Our voting mechanisms do remain, by and large, offline. So far we’ve only really discussed putting them online in remote parts of Alaska, and for military personnel living abroad. Anywhere else, you still use a machine that does not connect to the Internet. But as you mentioned, this fact leads a lot of people to say “Great, we’re safe,” to which I’d reply: “Not so fast.” Ballots themselves get loaded onto either USB drives or little memory cards. And we design ballots differently for every state, city, neighborhood. You of course have your local, and city-wide, and state-wide elections. Each of those ballots gets put together separately, often probably on a laptop (often probably connected to the Internet), and then put onto a memory card which then gets put into the voting machines.

I have attended demonstrations in which, even after someone alters the machine coding so that it looks like candidate A did not win over candidate B, you can go back and calculate the correct results. But then a third vulnerability comes from the reporting system through the Associated Press, which everybody uses for election results. And overall, a few simple preventative steps come to mind. Every ballot should produce a paper backup. Most states do have a paper backup. But states like Pennsylvania and Georgia have it only in certain parts of the state. If I were king for a day, I would say that if a machine does not produce a paper backup, then we cannot use it in an American election. If you do have suspicions, it would take a while, maybe several weeks, but you still could go back and retabulate by paper. These paper backups should stay in the polling place, and we should have periodic sample-audits done in each polling place to confirm that the electronic numbers match the numbers from paper ballots. If any disparity arises, then somebody should start a broader review.

Given the potential for some obvious fixes, both with elections and other digital operations, it might help for us to start addressing muddled U.S. government responses to the threats we’ve been discussing. For technical obstacles that any effective U.S. response must overcome, we could start from the fact that the most secure, stable, safe scenario (in which our hardened defenses deter would-be cyber attackers from even trying) is, if possible at all, still decades away. At the same time, aggressive cyber retaliation brings its own vulnerabilities, opening new prospects for enemies to track, copy, target, even redeploy one’s own weapon systems. And needless to say, American decision-makers face any number of legal and political quandaries many of our adversaries can set aside from the start. American officials (again for national-security reasons) often can’t offer evidence legitimating their need for defensive retaliation, and/or often can’t acknowledge the retaliatory measures that they do take — thereby exposing themselves to public criticism for an apparently excessive and/or an apparently weak response, and all while failing to deter other potential attackers. And given all these calculations and constrictions, I can’t help seeing cyber not as leveling the playing field, so much as actually giving the asymmetrical advantage to rogue states and terrorist actors. Again The Perfect Weapon does offer a sharp contrast between spectacular terrorist scenes like the September 11th attacks, and the semi-visible, partly psychological, gray-zone domains of hybridized and cyber war we’ve witnessed in Ukraine. But how have you seen, or might we all soon see, terrorist and rogue-state actors invent their own distinctive modes of gray-zone manipulations and calculated antagonisms?

So far nonstate actors have not made terribly sophisticated use of cyber. ISIS mostly has used it for recruiting (and even that turned out to be devilishly difficult to defeat, because you can take recruiting data and put it up in the cloud, so that if the NSA comes along and takes you down, you have copies secreted around the world to get back into business). Terrorists tend to move around a lot, and can have a hard time focusing, but cyber attacks take weeks or months of practice and patience. So one big blessing until now has come from us not having to face many fast strikes. They take a year or so to set up. You have to patiently find a way into companies’ or nations’ computer networks, and start understanding the relationships.

But we definitely should anticipate increased cyber attacks from terrorists and nonstate actors in the future, just as we should expect them to get more adept with drones. The North Koreans (obviously a dirt-poor country, but investing to make themselves pretty talented at this) used cyber to steal from Bangladesh’s central bank, and would have stolen a billion dollars had they not made a spelling mistake that got them caught (after taking only about 81 million dollars). They’ve used cyber against Sony, against hard infrastructure. They’re learning all the different ways in which this is such a useful tool to have in the toolbox. And if the North Koreans can do it, you have to conclude that almost anybody can do it.

And how might nonstate terrorists likewise absorb, again from Russian precedent, the lesson that the galvanizing and potentially unifying fear/anger generated by high-profile attacks may not prove as effective as some more subdued cyber campaign? When I think back, for example, to the BP oil-spill disaster, or the flailing Obamacare rollout, I sense how a few well-targeted, well-disguised interventions could eviscerate confidence in government so much more acutely than any more dramatic assault — particularly if your primary goal is to undermine your adversary, rather than to terrify your adversary.

That’s right, and that’s what the Russians did so successfully in the 2016 election. It matters not only because the Russians can now come back at some point (which I have no doubt will happen), but because 2016 taught such a striking lesson to so many other actors. One important characteristic of cyber is that everybody in this domain watches everybody else, learns from what everybody else does, and learns from their mistakes. So one of the most devastating developments of the last decade came when the United States lost some of its own cyber weapons to the Shadow Brokers, a group we believe to be Russian in origin, which now has the source code for some of America’s most potent weapons, stolen from the TAO (Tailored Access Operations) team of the National Security Agency. Once these very sophisticated delivery vehicles were stolen, you saw somebody else screw some not terribly sophisticated payloads onto them, and you got North Korea’s WannaCry attack taking down large parts of the British healthcare system in 2017. That sequence shows again how this all proliferates, because once the code gets out, you just need the ingenuity of a good group of programmers to take up a known vulnerability and exploit it.

Your book also mentions some shadowy second Edward Snowden figure. Did that subtheme eventually merge with the Shadow Brokers operation, or does a separate story still need to be told on this topic?

Well as the book describes, two NSA contractors got arrested.

Right. That all seemed maybe not to add up to this second Snowden.

The material they took, apparently for their own purposes, may well have been the material the Russians eventually got. And whether the Russians got this material from these contractors putting it onto insecure systems, or whether the Russians somehow learned, from the examples of these contractors, of a vulnerability that they themselves could exploit, is something I don’t yet fully understand. I don’t think many people do understand it. We don’t believe these contractors took this material with the intention of making it available to hostile powers.

Amid this messy and murky proliferation, we probably should talk a bit more about deterrence. Your book offers the clear and resounding case that we need much more robust public discussions about which types of attacks, directed at which sectors of our society, justify which types of responses from which government agencies and/or private entities. You argue quite persuasively that until America more openly acknowledges its present capacities, our democracy cannot debate sufficiently (in a domestic context) whether and how to maintain/enhance/utilize/regulate these capacities, and our government cannot call for equivalent international discussions without facing charges of shameless hypocrisy. You also point out localized impediments to constructive discussions: with U.S. defense and intelligence agencies perennially secretive about technological innovations, with the especially secretive intelligence community (often preferring long-term infiltration to any more dramatic utilization) increasingly central to cyber initiatives, and with certain foreign governments’ calls for greater digital transparency designed more to dominate their domestic populations than to establish a framework for international peace. And then in terms of us arriving at the type of transparent (enough), trusting (enough), consensus-building discussions that The Perfect Weapon calls for on deterrence questions: again, how to reconcile this call for rational collective decision-making with an era of gray-zone conflict in which so few of the preconditions for constructive public and/or diplomatic conversation actually apply?

You’re right. We’ve got a bunch of different challenges here. First we have the pervasive secrecy surrounding cyber, in some ways even greater than the secrecy that surrounded nuclear. There has been this knee-jerk reaction in the intelligence community that any discussion of our cyber capabilities makes us more vulnerable, that any discussion of how we should respond telegraphs to the enemy what to expect, and helps them to calibrate their defenses. I’m fully sympathetic to this idea that you need to keep some doubt in an adversary’s mind. But I also believe that our excessive secrecy surrounding cyber makes it much more difficult to deter.

We’ve been reluctant, for example, to identify countries that attack us. People remember the Russians attacking various American institutions involved in the 2016 election, but they don’t remember the Russians attacking the White House’s unclassified systems, the State Department’s unclassified systems, and the Joint Chiefs of Staff’s systems, all long before they went after the DNC. And our reaction to those previous attacks was basically zero. We did not publicly name the Russians. We did not make them pay a price. So if you’re Vladimir Putin, you have to come to the conclusion that if the Americans aren’t going to protect the White House, the Joint Chiefs, the State Department, then who’s going to care about the Democratic National Committee (essentially staffed by college kids)? So Putin came to a very reasonable conclusion based on what had and had not happened.

Then you reach the next phase of secrecy, in which we don’t want to sign up to rules that could restrict presidents from taking steps that the intelligence agencies might recommend. So suppose you and I sat down and said: “Okay, we’ve seen the cyber attacks against the United States (and sometimes originating from the United States). Let’s come up with a list of where we should have international understandings or norms on what remains off limits.” What should we put on our list? Election systems? Hospitals? Nursing homes? Let’s say we come up with 30 items, and then we take this list to the intelligence community, and they say: “Look, before we sign up for this, remember that the United States interfered in elections in Italy in 1948, Japan in the 1950s, Latin America in the 50s and 60s. We may want to give our presidents that option of interfering in the future if the alternative could be war.”

Or what about electrical grids? The U.S. had a plan, described in this book, called Nitro Zeus, which would have taken out entire networks in Iranian cities if the nuclear deal had not come together and if we had reached a military conflict. So again, do we want to sign up for something that would forever forbid American forces from using certain cyber weapons, especially if we thought those weapons might allow us to avoid a full-scale war?

But then for one additional question: do you want to leave these decisions entirely in the hands of government officials, or do you want the kind of public debate that we had in the nuclear era? And remember, with nuclear, we ultimately arrived at a very different position from where we started. In the 50s, General MacArthur wanted to use nuclear weapons against the North Koreans and the Chinese. By the 70s and 80s, we’d concluded that we would only use nuclear weapons as a matter of national survival. Going forward, we clearly will have to use cyber a lot more often than we do right now, but if we want others to abide by some sorts of norms, then we ourselves will have to accept giving up a bit of flexibility, and acknowledging a bit more openly our capacities.

I’ve mostly asked about U.S. governmental policy, projects, responses. But your book makes clear that we cannot think of U.S. cyber strengths or vulnerabilities or strategies so monolithically. The Perfect Weapon describes not only how our diversified public/private society differs from more all-pervasive state/military/commercial apparatuses in Russia and China, but also how fraught relations between the U.S. government and U.S. firms differ at present from the predominantly cooperative, synergistic public/corporate relations (basically what Eisenhower called the military-industrial complex) prevailing during the Cold War. The Perfect Weapon notes complex public/private entwinements in which one-third of Americans granted top-secret credentials actually work for private firms, with contractors like Edward Snowden included among the ranks, and with Snowden’s leaks not only exposing U.S. governmental operations and hypocrisies, but posing existential threats to various computing and communications firms, which saw individual customers’ and foreign governments’ trust in their supposedly secure, non-compromised, state-neutral products and services fundamentally diminished. If you had to bring tech-company executives (and workers) and government officials into a room right now, and help them figure out how their most crucial relationships ought to be recalibrated going forward, what would be the broad outlines of your approach? And how might the “Sputnik moment” we now face, with massive if semi-clandestine Chinese investment/infiltration within Silicon Valley, combined with China’s intensifying ambitions for tech dominance, factor into your pitch?

First thing, even before you call the meeting: we do have to understand how today’s structure differs radically from what we had in the Cold War. During the Cold War, some American firms (Raytheon, Lockheed Martin, the list goes on) focused entirely on supplying the U.S. government with weaponry, software, intelligence. Overwhelmingly their biggest clients were the Pentagon and (to some degree) the intelligence agencies. Today, for major companies like Google, Facebook, Microsoft, and Twitter, their government business is pretty minimal. Amazon runs the CIA’s cloud service, but by and large these companies operate in the private sector. So most importantly, they must show their international customers (because most of their customers come from outside the United States) that they are no pawn of the U.S. government.

Lockheed Martin and Boeing and related companies never had to worry about that question. Everybody assumed they were directly tied to American Cold War projects. But today’s employees at, say, Google, might object to participating in artificial-intelligence programs and so forth run by the Pentagon. These companies cannot so simply sign on to help the United States create the next generation of weapons. The specific Google objections mentioned in this book actually came from a pretty small project involving future drone technology.

So when you get this meeting together, you first have to acknowledge that very different overall climate. Maybe we need spinoffs from these tech companies, which do purely defense work, completely separate from their consumer businesses. But will these companies commit to that? Or how much transferable talent would it take for the U.S. government to run such projects by itself? And of course, even then, the U.S. government would be pretty slow and tentative at it. So to what degree does our government have to scale back expectations on what the private sector will do for it? And on the other side, what will it take for our private sector to recognize that they’ve only had the ability to pursue this kind of path-making, path-breaking research because they live in a country with capitalist freedoms protected by the government? That’s quite different from the type of government research-and-development presence that you would find in Japan, China, Russia. Therefore, I would try to make the case that our tech companies owe something to this system that has allowed them to flourish. I’d tell them to think long and hard when the Chinese come along and ask them to turn over their technology in order to operate in China, or to store all their data in China so that the Chinese can grab it without much legal due process. But similarly, if our government simply assumes we can go back to an old Cold War model: we can’t. And until you get that type of recognition on both sides, that conversation in the room won’t be terribly productive.