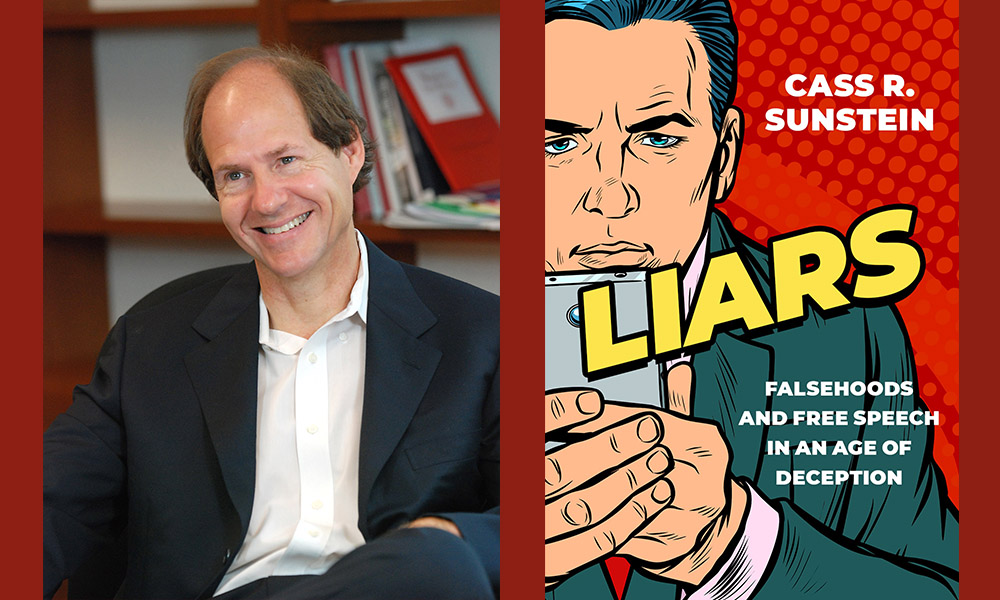

Where might the “universe of regulable falsehoods” have more variety than it might first seem? Where and why should we nonetheless protect a wide range of falsehoods? When I want to ask such questions, I pose them to Cass R. Sunstein. This conversation focuses on Sunstein’s book Liars: Falsehoods and Free Speech in an Age of Deception. Sunstein is the Robert Walmsley University Professor at Harvard Law School, where he founded the Program on Behavioral Economics and Public Policy. From 2009 to 2012, Sunstein served as the Administrator of the White House Office of Information and Regulatory Affairs. In 2018, he received the Norwegian government’s Holberg Prize, often described as the equivalent of the Nobel Prize for law and humanities. His recent books include Can It Happen Here?, The Cost-Benefit Revolution, How Change Happens, and On Freedom. Our 2020 conversation about Conformity: The Power of Social Influences can be found here.

¤

ANDY FITCH: Could you first sketch the prevailing Supreme Court approach to (and some intellectual antecedents for) tolerating a broad circulation of false information, if only to promote robust free speech?

CASS R. SUNSTEIN: Sure. I’ll start with the 2012 United States v. Alvarez decision, where the Court basically said: “We don’t want to empower any Orwellian Ministry of Truth.” In practice, this means: we won’t regulate falsehoods just for being falsehoods. In this particular case, somebody falsely claimed to have won the Congressional Medal of Honor, in violation of the Stolen Valor Act. But the Court more or less said: “Well, you have various available options for protecting the interests of our democratic society, and for promoting the truth. You can publish an official list of medal recipients. You can publicize the winners in other ways. You can engage in counter-speech to disprove this claim. But you can’t ban someone from making this claim. A ban like that would have no limiting principle.” And that notion of protecting falsehoods has its own antecedents, most directly the 1964 New York Times Co. v. Sullivan decision, which declared that libelous statements about public figures receive a broad measure of protection. So long as the speaker was merely negligent, or just made an honest mistake, such false statements in these situations remain protected speech.

We also have a longer and more complex Court history of giving protection to falsehoods. Many people know Justice Holmes’s famous statement that you can’t falsely cry “Fire” in a crowded theater and create a panic, which stands as the defining example of speech that doesn’t receive First Amendment protection. That brisk but brilliant example offers something false, knowingly false, harmful, and imminently harmful. The Court has frequently cited that example, and has never taken it back.

Still, even after Alvarez, we lack some clarity on what separates the false cry of “Fire!” in a crowded theater from the lie about winning a Congressional Medal of Honor. That scene in the theater stands out as more imminent, and less fixable through counter-speech, than, say, a false claim made in the context of a political campaign. But other, less imminent forms of speech can also be regulated. False commercial speech (say if you claim your product will cure cancer, when it won’t) can be considered fraud and can be regulated. Perjury is a felony. If you tell the FBI something untrue about yourself or about somebody else (or if you pretend to be an FBI officer, and solicit information from others), you can face criminal charges. So the universe of regulable falsehoods has more variety than it might first seem, which in part motivated me to write this book.

Liars affirms the basic Court logic for protecting a broad range of speech, but also describes that logic as “awfully abstract” at times, particularly in the face of certain 21st-century challenges to effective democratic self-rule. Could you outline some of today’s most pressing external forces (say coordinated disinformation campaigns by foreign adversaries) and internal forces (say dysfunctional social-media dynamics) that have prompted you to qualify abstract free-speech claims?

When I first began putting this book together, I actually thought of it as a kind of manifesto in favor of regulating damaging falsehoods. That was my original impetus. I considered working on the book with a commercial publisher, who loved the idea of an all-sirens-blazing account of the need to regulate falsehoods. But when I thought long and hard about whether I had the goods to deliver that kind of manifesto, I just wasn’t sure. And those unresolved questions made me feel more comfortable working with an academic press, with Oxford, who actually wanted me to try to figure something out.

Then as the book progressed, it became much clearer in my head (and on the page) why in fact our general protection of falsehoods remains a really good idea. With John Stuart Mill’s great “On Liberty” essay in mind, for example, I could recognize why, if someone makes a mistake about climate change or a political opponent’s policy, we don’t want to threaten them with punishment. We can’t trust public officials always to know and to dispense the truth. We can’t trust our legal system always to make infallible or even accurate judgments. If you might get punished for saying something false, then you might find it safest to shut up a lot, even when you have something true and useful to say. You might never learn what your fellow citizens really think (again, regardless of whether it’s true or false). You might never receive the benefit of needing to strengthen and clarify your own argument in response to theirs.

Taking all of this into consideration, I had to admit that these time-honored arguments for less-regulated speech still had more force than I’d originally sensed. But I also realized, as your question suggests, that those somewhat abstract arguments need some very contemporary updates, in view of the urgent necessity to protect our democratic system against certain kinds of falsehoods, all while maintaining our commitment to a form of expressive freedom that includes various falsehoods and even lies.

To claim that our democracy requires breathing room for falsehoods doesn’t justify every falsehood. Posting online that not wearing a mask will greatly reduce your likelihood of catching COVID (because particles trapped in masks cause COVID, or something clearly false like that) can itself cause harm. So how should we think about less high-minded and abstract scenarios, where leaving breathing space for speech also means leaving room for real harm? If somebody falsely claims their political opponent spent five years in jail for a sex offense, then we have to think about the consequences for our democracy, as well as for individual reputations. That puts Sullivan back on the table, and maybe in some sense back in play. Whatever philosophers say about autonomy or the marketplace of ideas sounds a little tinny in that context.

Still, in terms of socially corrosive statements, how should the Court likewise update its thinking when it comes to forms of “positive” misinformation, or impersonal, ideas-oriented misinformation, again where libel concerns often don’t apply? What about somebody saying, for example: “Obviously I won the 2020 election in all 50 states”?

Well, it might sound counterintuitive at this moment, but especially for what public officials say (even about elections), we should want our government to give a wide berth and protect their freedom of expression. Now, how private companies choose to respond to that kind of false statement opens up other questions. But government needs to grant a great deal of flexibility. And for government, I see the right framework as having four moving parts. First, did the person knowingly make a false statement (which could call for regulation more than a mere mistake)? Second, does this statement cause a modest harm, or a great harm? Third, should we consider that harm likely, or merely conjectural? Fourth, is that harm imminent, or something that would play out in the long term? You could have a ranking of, say, zero to four for each of those criteria. And that combined score can tell us how strong a case for regulation we have.

In most cases, for example, a politician’s false claim about winning the election might be hard to prove as a deliberate falsehood. And even if you could prove that, we probably couldn’t expect high-probability egregious harm in the immediate short term, before anyone can offer corrective counter-speech. Though the first weeks of 2021 have, of course, raised questions about all of that.
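The four-part framework above can be made concrete as a simple scoring rubric. What follows is only a minimal sketch, not Sunstein’s own formalization: it assumes the four zero-to-four scores are simply added together (the conversation says only that a “combined score” indicates the strength of the case), and the class name, field names, and sample scores are hypothetical illustrations.

```python
from dataclasses import dataclass

# Hypothetical rubric: each criterion is scored from 0 (weakest case for
# regulation) to 4 (strongest case for regulation).
MIN_SCORE, MAX_SCORE = 0, 4


@dataclass(frozen=True)
class FalsehoodAssessment:
    knowledge: int   # 0 = honest mistake, 4 = clearly knowing lie
    magnitude: int   # 0 = modest harm, 4 = great harm
    likelihood: int  # 0 = merely conjectural, 4 = highly likely
    imminence: int   # 0 = plays out long-term, 4 = immediate

    def __post_init__(self) -> None:
        # Reject any score outside the assumed 0-4 range.
        for name in ("knowledge", "magnitude", "likelihood", "imminence"):
            value = getattr(self, name)
            if not MIN_SCORE <= value <= MAX_SCORE:
                raise ValueError(
                    f"{name} must be between {MIN_SCORE} and {MAX_SCORE}, got {value}"
                )

    def combined_score(self) -> int:
        # Assumption: the four scores are summed (range 0-16); a higher
        # total means a stronger case for regulation.
        return self.knowledge + self.magnitude + self.likelihood + self.imminence


if __name__ == "__main__":
    # Illustrative, made-up scores, not drawn from the book.
    false_fire_cry = FalsehoodAssessment(knowledge=4, magnitude=4, likelihood=4, imminence=4)
    election_claim = FalsehoodAssessment(knowledge=1, magnitude=3, likelihood=1, imminence=1)
    print(false_fire_cry.combined_score())  # 16
    print(election_claim.combined_score())  # 6
```

Any particular numbers here are judgment calls. The sketch only makes explicit why a knowing, grave, likely, and imminent falsehood (the false cry of “Fire”) scores far higher than a hard-to-prove claim whose harms are speculative and slow to arrive.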

Questions of regulation bring us to a broader range of potential responses to false statements: from forms of punishment, to forms of censorship, to forms of corrective disclosure, to forms of recalibrated choice architecture in how digital speech gets distributed. Again, could you lay out the sometimes overlapping, sometimes competing merits of these various approaches, depending on the particular context? And could we bring in questions of who (government authorities, corporate platforms, concerned citizens) should initiate which respective responses?

That takes us to one of the most exciting developments in generations for the theory and practice of free speech. Today’s online platforms already respond to certain falsehoods in ways governments couldn’t even have contemplated before. They just didn’t have the technology. But now governments might want to piggyback on (or to mandate) some of these creative tools used online.

So in terms of a rough hierarchy of responses, government has the most severe option of criminalizing behavior or speech, and maybe deciding (at least in a tolerant liberal democracy like ours) to charge you with a misdemeanor. For a second, less severe approach, government (either executive authorities or courts) could impose a civil penalty — no jail, no criminal charge. Third, government could require some sort of disclosure, say an explicit retraction (“We published this false statement, which we now take back”), or a warning label (“FALSE,” or “DISPUTED,” or, “For other perspectives, see X”). Right now we see from Twitter and Facebook a combination of these disclosure approaches.

And then for a fourth option, having to do with choice architecture, the social-media platforms could start reducing circulation of certain false statements — making them less prominent in newsfeeds. These statements might never get taken down. But a whole lot of people won’t see them. Or some other kind of friction might get introduced. Before you like or share a post and help it spread, you might have to see (or maybe even click on) some counter-speech example likely to have an effect.

We’ll need a lot of empirical study to sift through the effects and the magnitude of these different approaches. But in a short period of time, regulators’ assortment of tools to consider has expanded greatly. And the possibility for focused, freedom-respecting tools makes the use of such tools much more appealing. So here again, some of those more abstract distinctions between banning speech and permitting unregulated speech might need further updates.

What could it look like, in a couple of representative situations, to cultivate “optimal chill,” in terms of impeding the most harmful, least useful, least correctable falsehoods, with minimal (though admittedly not zero) impact on less significant falsehoods and gropings for truth? And again, which players in government, in corporate sectors, in civil society, should play which roles in cultivating such optimal chill?

So suppose I have a product I know doesn’t help with heart disease. I know it actually raises risks of heart disease. I know this. But I also know that my population of potential purchasers will really take to buying my product if I make misleading claims about its heart-healthy features. With no restrictions placed on my claims, I might have the incentive to lie. But with the implicit threat of Consumer Reports publishing an article countering my claims, I’d face a different calculation. And with regulatory guidelines that only allow me to call this product heart healthy if that claim is demonstrably true or at least arguably true, that takes us even closer to optimal chill.

For another example, we might want to chill people who have shown themselves willing to make false and devastating claims about other private individuals on Twitter and Facebook. That probably makes sense. But this gets harder when we come to the optimal chill for falsehoods about public figures. We might still love Sullivan. But we need to recognize it as a dinosaur. That doesn’t necessarily mean it should go extinct. But we can do better.

And again, creating optimal chill doesn’t have to mean bringing in stiff new penalties. Maybe to prevent certain harms to our fellow human beings, to our brothers and sisters who otherwise can have their reputations ruined by somebody who doesn’t bother fact-checking his statements, we say that this false speaker will have to face the chilling effect of owning up to having made a negligent and needlessly harmful statement. Maybe if this speaker says something demonstrably false, he’ll have to pay one dollar in damages, but he’ll also have to admit he said something false.

When I ask who should take on which responsibilities, I also have in mind the more pointed question of if/when you see potentially problematic consequences of already dominant digital platforms (already facing escalating accusations of abusing this dominance in predatory and socially detrimental ways) now taking on further quasi-state capacities. And a broader question here might ask if/how you see economic redistribution and antitrust regulation as essential complementary pillars to robust free speech. In what ways (if any) does reinforcing robust free-speech protections by necessity involve promoting economic, political, and technological equality?

Good question. For me, with these kinds of big topics, I try to compartmentalize things a bit. So, for example, what should we do about falsehoods, holding everything else constant? I’ve spent a lot of the last year on precisely that question. Then separately, do we have significant violations of our antitrust laws today? Or do we need to rejigger those laws in response to new technologies? I’m actually still agnostic on these topics, and I don’t have much antitrust expertise.

On economic equality, I definitely think that we need much more of it. A more progressive income tax seems a very good idea. More help for people at the bottom of our economic ladder seems an exceptionally good idea. Taxing the rich seems to me good, and helping the poor seems to me great. But whether regulation of social-media giants will help move us towards more economic equality again seems to me not very clear.

Many people who want aggressive regulation of Google and Twitter and Apple might also want more economic equality. But I wouldn’t prioritize aggressively regulating these companies as our first or best step towards getting more economic equality. Instead, I’d emphasize a more progressive income-tax system, a larger and more broadly available income-tax credit, more spending on education and on making college more accessible — and so forth.

Finally then, alongside new tech capacities to amplify false claims, how should jurisprudence on speech factor in contemporary behavioral-science findings? What challenges to Mill’s conception of a truth-optimizing marketplace of ideas do you take, for example, from research indicating that false information spreads faster and often sticks more enduringly in human brains? Or given our species’ state of metacognitive myopia, and our inclination to absorb unmediated signals, rather than to sift through background context (particularly when the signals sent convey a sense of urgency, surprise, disgust), how much can we rely on corrective statements or links to offset the societal harms posed by digitally distributed misinformation?

I do believe that we should prioritize what we’ve learned over the past decades about how the human mind works. The legal system really hasn’t done this yet. The most dramatic and relevant findings show that, for a certain range of us, if somebody tells us something false, and even if we’re simultaneously told that this statement’s false, we still can’t help remembering it after the fact as true. Or many of us certainly will wonder whether it might be true, which does suggest that corrections have a limited and imperfect effect. This can strengthen the argument for taking something down, instead of simply countering it. Or maybe people first should see the evidence that a statement is false, before they even see the statement — rather than reading a correction after the fact.

With respect to false claims about Hillary Clinton’s emails, or about Democrats stealing the Pennsylvania election, I still would recommend giving a wide berth for freedom of expression. But we do need to recognize that the corrosive effect of such lies might be much worse than we had assumed. Even people who doubt President Trump’s false claims about the election in real time might still be swayed. Or a behaviorally informed hunch would suggest that a large number of Hillary Clinton’s supporters might actually feel alarmed in some part of their mind, even if on reflection they can see that statements about her emails’ criminality were baseless.

And I guess we’ll need to discuss next time how much we can expect today’s platform firms to depart from a business model largely fueled by luring and hooking users through shocking and exciting and arresting speech.

Right, this book’s acknowledgments do offer the disclaimer that I’ve worked a bit (not for more than three days total, over the past six years) as a consultant for Facebook. But I know that a lot of what I say in here Facebook won’t particularly love.