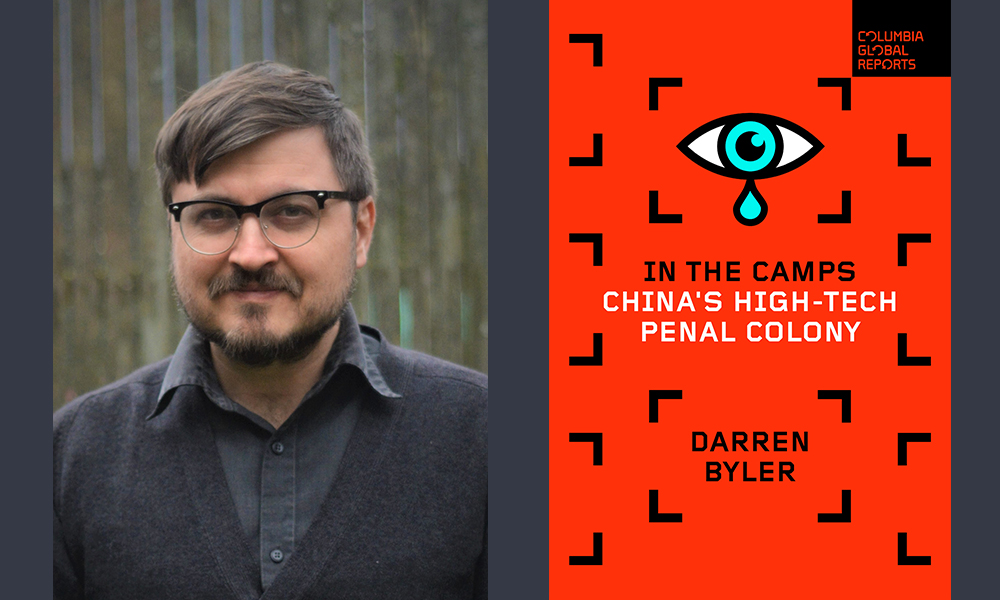

Darren Byler is an anthropologist and Assistant Professor of International Studies at Simon Fraser University. In his forthcoming book, *In the Camps: China’s High-Tech Penal Colony*, he documents the dehumanization produced by the Chinese government’s reeducation camps in the country’s northwest. The bleakness of the situation leads Byler to weigh the similarities and differences between these camps and Primo Levi’s depiction of social death in Nazi concentration camps.

Byler’s book is powerful because it draws on interviews with former detainees and camp workers from the region of Xinjiang. Many held permanent residency or citizenship in other countries and were detained after returning to China in 2016 and 2017. Some managed to secure their release thanks to pressure from foreign governments. Despite the risks to themselves and to their families still living in China, they spoke to Byler so that the world could bear witness to their stories.

Byler focuses on how the Chinese government uses surveillance technologies to maintain control in the camp system. Because detainees serve as test subjects for product improvement, Byler contends, the companies making the surveillance software have been able to develop highly competitive technologies and consumer products. The international pursuit of economic profit, he argues, is a leading reason the world is turning a blind eye to human rights abuses.

My conversation with Byler has been edited and condensed.

¤

What’s happening in the camps, and why is it occurring?

The camp system is part of a broader policing campaign that aims to prevent relatively rare instances of political violence, often framed as terrorism, and to create a safe environment for the Han Chinese settler population that has moved onto Uyghur ancestral lands over the past two decades. The “reeducation camps,” as they were commonly called when I last visited China in 2018, are spaces where Muslims deemed “untrustworthy” because of their religious or political thoughts and actions are sent to be reformed. This so-called reform occurs through a systematic process of psychological and physical abuse.

How are surveillance technologies used to maintain control in the camps?

Detainees from across the region describe the camps as using highly sophisticated camera and checkpoint systems that monitor movement. In some cases, the cameras appear to be motion-activated; in others, they use facial recognition. The cameras are used to force detainees to sit ramrod straight on plastic stools in their cells for many hours while watching political speeches on TV. If detainees get up without permission, a guard yells at them through an intercom. Guards tell them the cameras will detect if they speak to one another. As the manuals for the camps put it, the surveillance has “zero blind spots.”

What companies provide the surveillance technologies, and how do they justify their relationship with the Chinese government?

Hundreds of companies provide the technologies. National-level state contractors, such as the China Electronics Technology Corporation and its subsidiary HikVision, provide the bulk of the services, hardware, and software. Large corporations, including Alibaba, iFlytek, Megvii, Sensetime, Yitu, and Meiya Pico, justify accepting lucrative counterterrorism contracts as part of their patriotic duty. This rationale parallels Amazon’s and Google’s justifications for supporting US military and policing systems. However, research shows that projects drawing on state surveillance data help technology companies develop new intellectual property while extending their market share. Long-term profit and competition, not patriotism alone, are thus also driving companies to do this kind of work.

You argue surveilling camp prisoners, using them as test data, creates technological “improvements” that spread to the rest of the world. What’s happening that leads you to conclude, “behind Seattle stands Xinjiang”?

The global economy is deeply interconnected. In this sense, you can say that Xinjiang stands both behind and in front of Seattle. As I describe in detail in the book, some Xinjiang face recognition systems were partly trained by Seattle-based engineers on a dataset from the University of Washington. In the mid-2010s, the former cofounders of Microsoft Research Asia, often described as the “national military academy” of China’s information technology industry, became key investors in and executives of a computer vision company called Megvii. By 2017, Megvii, which had a research wing staffed by UW graduates near the Microsoft campus, was providing automated surveillance to police departments across China, including in Xinjiang. Over time, owing to the fidelity and symmetry of Chinese policing data, the algorithms became extremely precise. These algorithms are now marketed by Megvii, and by other tech companies founded by Microsoft alumni such as Yitu and Sensetime, as part of policing systems in as many as 100 countries.

Are you saying that detainees are used as guinea pigs for product development?

Yes. Detainees, and Muslims who are not yet detained, are viewed as undeserving of civil rights protections and as a site of investment. This is why they’re made the target of cutting-edge technologies that attempt to reform their behavior. To be more precise, though, the product development pipeline is opaque.

Absent internal company information, the best researchers can do is make inferences by looking at what applications these companies produced alongside and after developing their security products. In some cases, the dual uses are quite close. For instance, camera companies like Dahua have drawn on Yitu algorithms to develop nearly $1 billion of “smart camp” systems in Xinjiang. At the same time, they have developed “smart campus” solutions used to track student behavior in elementary schools in eastern China, and they have assisted Amazon in tracking the spread of COVID-19 using heat-mapping camera systems in Amazon facilities.

You contend the pandemic created conditions that made it convenient for the rest of the world to “forget” its human rights objections. What’s incentivizing this attitude?

In a global crisis, the ethical concerns that arise from trading with companies whose technologies were partly developed by experimenting on interned Muslims appear to be ignored or forgotten. Powerful tech firms like Microsoft and Amazon are unwilling to bear the cost of confronting their complicity in these systems, and regulators and consumers are unable to hold them to account.