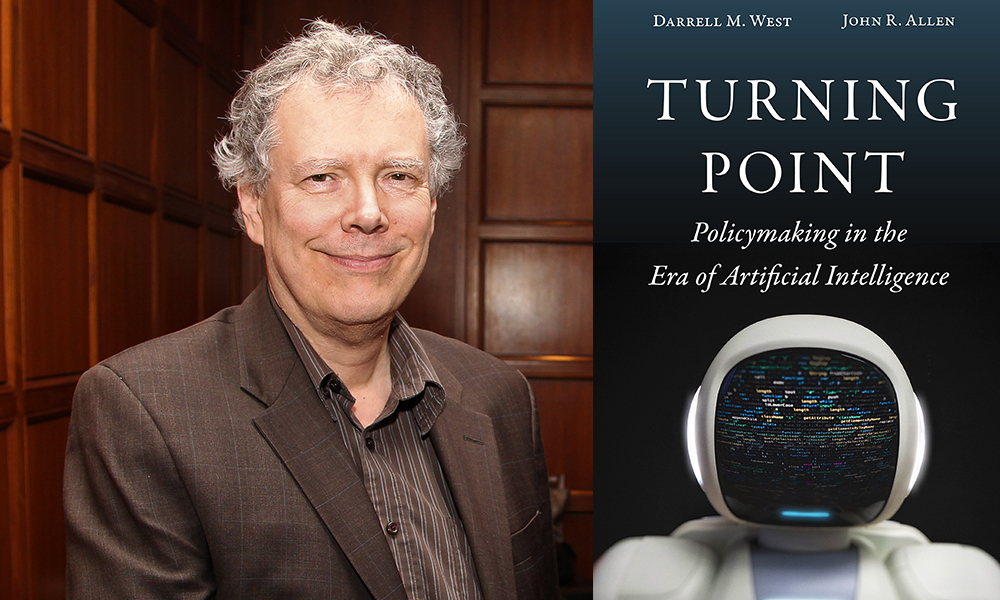

Why will implementing AI technologies require redesigning our whole infrastructural, occupational, and cultural ecosystem? Why can’t we think of today’s technological developments as just a technical matter? When I want to ask such questions, I pose them to Darrell M. West. This present conversation focuses on West’s book (co-written with General John Allen) Turning Point: Policymaking in the Era of Artificial Intelligence. West is Vice President and Senior Fellow of Governance Studies at the Brookings Institution. Previously, West was a professor of Political Science and Public Policy (and Director of the Taubman Center for Public Policy) at Brown University. His current research focuses on American politics, technology policy, and artificial intelligence. West has written 25 books, and has won the American Political Science Association’s Don K. Price award for best book on technology (for Digital Government), and the American Political Science Association’s Doris Graber award for best book on political communications (for Cross Talk).

¤

ANDY FITCH: Your book focuses on artificial-intelligence applications within specific sectors, rather than on reductive predictions of whether AI will lead to utopia or dystopia. But if we first assume the potential for tremendous productivity, efficiency, and capability gains across any number of fields (alongside equivalent dangers of amplified disparities, exacerbated social divides, and threats to our national security and international competitiveness), could you trace the contours of what an effective “whole of society” approach to responsible AI development should include? Why does robust AI adoption mean ramping up not just technological innovation, but wide-ranging policy visions, regulatory actions, legal liability, corporate self-policing, and informed public engagement?

DARRELL M. WEST: AI is the transformative technology of our times. Applications have appeared in virtually every sector — from healthcare and education, to transportation, e-commerce, and national defense. John Allen and I wrote this book to track those varied uses, and to consider AI’s broader societal and ethical ramifications. We advocate a whole-of-society approach because AI really disrupts everything. It affects how people get information, how they share data about themselves, how they buy products, and how the country defends itself. With such a widespread revolution, we need to make fundamental changes in how we think about our policy regimes.

The technology has advanced to the point where we could in fact move towards either utopia or dystopia. The most crucial variable in determining how we navigate that turning point is public policy. Historically, the US has done a good job of embracing and harnessing new technologies to maximize benefits for people while minimizing the downsides. We need to think about policy changes that will keep humans in charge of artificial intelligence.

Could you flesh out a bit why an equitable, inclusive, forward-looking AI approach would need to prioritize transparency (say in AI decision-making processes), accountability (say in algorithmic designs and operations), and robust public scrutiny (amid what so far largely has remained a realm of “permissionless” corporate innovation)?

Given the potential dangers (such as creating even larger divides between the haves and the have-nots), we consider it critical to develop an inclusive model for AI applications. We believe this technology has strong potential for benefitting society as a whole. But in order to reap these benefits, we need to make it more transparent and accountable, and have more public scrutiny of what takes place under the hood. By now, everybody has heard the term “AI,” and many people have vivid visions of the whole thing spiraling out of human control, and our species no longer shaping its own destiny. Our book argues that humans will remain very much in control if we take the right policy actions to ensure transparency and fairness in AI designs and operations.

As an example of how to improve transparency, consider AI systems built from millions of lines of code, which quickly become impossible even for top professionals to understand. We recommend that software coders annotate the code as they develop it, leaving accessible records of pivotal decisions made along the way, so that the rest of us better understand the value choices embedded in the software. That's just one of many potential steps for gaining a better understanding of how AI operates.

And here could we also add, alongside standard AI implementation challenges that any society will face, contemporary US society’s historic levels of embittered polarization — as well as its turn towards an institution-devaluing, elite-discrediting populism? With such corrosive cultural forces at play, do we face our own AI implementation challenges at an especially inopportune moment? If so, what broader efforts to restitch our social fabric seem an essential part of a successful and desirable AI buildout?

The US definitely faces dramatic challenges, in part for the reasons you just mentioned. Societies can’t adjust to AI technology in isolation from everything else happening in the economy, culture, and political system. The AI implementation challenges escalate when you have high income inequality, a highly polarized politics, and populist tendencies that make people distrust technical elites. So as we think through how to get a better handle on AI, we have to address failures of governance, breakdowns of public trust, and entrenched inequities. We need to demonstrate that our AI technologies can help us devise solutions to enhance transparency, improve governance, and address societal divides.

Many of those concerns already have manifested themselves in our gig economy. Think of all the Amazon drivers and Uber Eats deliverers right now, facing heightened health risks just to keep their temporary jobs, often with no health benefits attached. COVID certainly has revealed fundamental inequities in our present economic system that should give any policymaker pause. So when we discuss new technologies, we also need to discuss how to reconstitute our social safety net, so that the new workforce patterns enabled by digital technology don’t create even bigger social and economic problems.

All of that points to the need to restitch our social fabric, and ensure everybody gains from these technological advances. If we end up in a situation where 20 percent of society sees huge benefits from new technologies, and 80 percent mostly sees downsides, that would harm all of us in the end.

Similarly, for this book’s geopolitical stakes, how will political power and economic competitiveness increasingly come to depend not just on designing an innovative device or effective algorithm, but on building much more comprehensive physical-cultural-occupational ecosystems conducive to channeling these vastly expanded technological capabilities? And in which sectors might you already see a few warning signs of other societies beginning to outperform us — again perhaps less in particular standout inventions, than in long-term infrastructural, institutional, civilizational strategy?

AI will reshape politics, the economy, and global relations. So when we talk about AI implementation, we also have to think through the broader international consequences. Certain countries stand out for being quite systematic in their approach to AI. China definitely stands out for its AI investments, as do Singapore, South Korea, and others. These countries have honed a sense of where AI can harness their own economy’s and society’s strongest growth potential. They’ve taken significant steps to invest in those areas.

The US, by contrast, tends not to adopt this kind of systematic thinking. We’ve grown accustomed to a more haphazard process. When a company has a good idea, it invests substantial resources in one particular product, and that’s how our technology innovation happens. We tend to think of innovation mainly from the standpoint of commercial or consumer markets — as opposed to focusing on national economic competitiveness or even national security. But America needs to think more broadly and comprehensively about longer-term innovation: where the opportunities will arise, how our whole society will adapt, who among us might find themselves feeling left behind, and which of our global engagements and commitments will need to shift. Answering those more pointed questions will help to determine whether we end up in a good or bad place with AI.

Now for specific sectors, we can start with healthcare. You point to substantial AI-driven gains in diagnostics, prevention, treatment, drug development and clinical trials, cost-effectiveness and fraud monitoring. Combined, these various benefits hopefully will direct us towards a more personalized and patient-centric approach to medicine, with fewer of the bureaucratic impediments. At the same time, you point to existing fissures and inequalities in health outcomes (along lines of race, gender, income, age, geographical location) potentially getting further exacerbated. Could you describe how particular health-policy choices could foster the benefits, while fending off those potential harms?

Let's start with emerging technologies that can improve healthcare access as well as outcomes. For example, we already see growing use of remote-monitoring tools and wearable devices that track your heart rate, blood pressure, and blood-sugar levels. These devices allow all of that monitoring to take place from your home. Your physician's office receives these results electronically, and your doctor might detect a problem before you even sense it, then alert you and schedule a more detailed consultation. That's just one example of subtle but foundational shifts in reorienting our health system towards preventative and proactive care. That change can save significant money, while also improving the quality of care.

But in a more ominous direction, our healthcare system already operates amid grave social inequities. When we discuss new products, devices, and services, we always need to ask: “Who can access these advances?” COVID has made even more concrete the enormous racialized inequalities that African Americans long have experienced, relative to others. By extension, any enthusiasm we feel for new health technologies always should encourage us to push for equitable access to these innovations — and to use technology to reduce disparities based on race, gender, age, income, and geography. Going forward, we need to ensure that such breakthroughs don’t widen inequalities, but instead help to close them.

We've already seen AI healthcare applications end up intensifying biases or unequal outcomes. Thankfully, we've also developed AI applications to diminish bias. Third-party audits of projected impacts now can alert us to these concerns before things really start moving in a negative direction. This also might mean first launching pilot projects on a local basis, or monitoring impacts across different demographic groups. That can give us a broader sense of the societal ramifications before they get magnified. All of this can play a tremendous role in improving people's lives and longevity.
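One common way to monitor impacts across demographic groups, offered here only as an illustration and not as the method the book proposes, is to compare how often an algorithm grants a benefit (say, flagging a patient for follow-up care) within each group. The sketch below borrows the "four-fifths" ratio that US employment-discrimination guidelines use as a rule of thumb; the function names, the record format, and the 0.8 threshold are all assumptions made for this example.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of each group that the model selected for the benefit.

    `records` is a hypothetical list of (group, selected) pairs, e.g. one pair
    per patient, where `selected` says whether the model flagged them for care.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [selected, total]
    for group, selected in records:
        counts[group][1] += 1
        if selected:
            counts[group][0] += 1
    return {g: sel / tot for g, (sel, tot) in counts.items()}


def flag_disparities(records, threshold=0.8):
    """Flag groups whose selection rate falls below `threshold` times the best rate.

    Returns {group: ratio_to_best_group} for flagged groups only. The 0.8 default
    mirrors the four-fifths rule of thumb; it is a screening heuristic, not proof
    of bias or of its absence.
    """
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was selected, so there is no ratio to compare
    return {g: round(r / best, 3) for g, r in rates.items() if r / best < threshold}
```

If group A is flagged for follow-up care 40 times out of 100 and group B only 25 times out of 100, group B's ratio is 0.625 and it gets flagged for a closer look. A real audit would also check error rates and clinical need per group, since equal selection rates alone do not establish fairness.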

Within this broader institutional context, how will healthcare providers need to reinvent their own professional practice and their relationships with patients?

Right now, doctors have incentives to bring a lot of people into their offices to take a bunch of tests. That’s how many medical practices make money. That also helps explain why we in the US have such high healthcare costs, while often getting worse results than other societies. So we need to transform these healthcare incentives, to actually keep people out of doctors’ offices and hospitals, the places with the highest costs. Again, remote-monitoring devices can provide diagnostic information, allowing physicians to spot problems like emerging heart arrhythmias. We need various ways to screen patients in a relatively low-cost manner, and to identify people at risk of harmful consequences — and then to devote more of our medical attention to those particular individuals. Along the way, AI technologies can lower costs, improve service delivery, and lead to a healthier population.

Then for a broader social diagnostic, education offers a sphere in which the stakes of AI innovation seem more or less existential for the US, shaping future global stature and competitiveness — and demanding the most inclusive possible harnessing of our creative, scientific, engineering, entrepreneurial, and organizational talents. So could you first describe how a US education system already prone to decentralization, fragmentation, and inequality can in fact benefit from the kinds of personalized learning that AI applications help to streamline and make cost-effective? What can such personalized learning look like, and how can it make us more (rather than less) connected, engaged, and equal?

Personalized learning will allow education systems to build themselves around the important point that various people have very different learning styles. Some people need more repetition, while others master material quickly and can race ahead to higher levels of study. Technology allows us to tailor the learning modes and pacing best suited to each individual.

Through technology, classrooms can become vehicles for personalized learning. Online tutorials often provide the basis for these transformations. Technology actually can transmit certain types of knowledge as well as (or even better than) humans can. That in turn can free up teachers to focus on problem-solving, on creative applications of learning, and on guiding students through how to find information when they don’t know where to look for the answer. These are essential skills today’s students will need throughout their adult lives.

So for education’s own ecosystemic restructuring, could you sketch some corollary changes in reconceiving lifelong engagements to boost Americans’ skillsets, in assessing educational transmission (prioritizing, for instance, student outcomes over instructional inputs), in the training of instructors themselves — all substantially recalibrating “the dynamic of learning versus teaching”? Why might the very term “teacher,” as we’ve understood it, no longer suffice?

Today our society invests in education roughly through age 25. Most Americans get K-through-12 for free, and then at the college level many students qualify for financial aid or federal assistance of various kinds. But moving further into this 21st-century economy powered by AI and emerging technologies, we’ll need to devise lifelong-learning models. What you learned through your high-school or even college education probably will be inadequate five or 10 years after you graduate. Workers will need to acquire significant new skills at age 30, 40, 50, 60, and perhaps beyond.

This might mean private companies providing courses for their employees, or paying for employees to take classes elsewhere. It probably includes various online platforms designed to impart new skills, and certificate programs through which workers can demonstrate competency. But also, as a country, we need to place a much greater premium on workforce development and on-the-job learning. The nature of our economy, our labor markets, and our individual jobs will change faster and faster — and we’ll need to help Americans keep improving and expanding their skills, so they don’t get left behind.

AI can likewise enhance our transportation sector — particularly through mass introduction of autonomous vehicles improving safety, alleviating congestion, reducing greenhouse gases and pollution. In order to do so, however, AI innovation will need to include significant smart-infrastructure investment, astute policy and legal and regulatory decisions, recalibrated cybersecurity and personal-privacy and insurance provisions, and an overcoming of substantial public doubts. So while popular conversations might fixate on cool designs, corporate brands, and charismatic entrepreneurs, could you explain here especially why harnessing AI requires not just the perfect smart car (or commercial truck, or public bus), but a much more extensive ecosystemic overhaul?

One of the most annoying parts of urban living today is traffic congestion. Many of us spend countless hours every week sitting in our cars or on a bus because of it. Technology can dramatically reduce this aggravation, which is also a source of economic inefficiency and a significant contributor to carbon emissions.

As the technology improves, people will switch to autonomous vehicles. But like you said, we also need to invest in smart infrastructure, such as dynamic traffic signals. Right now, many traffic signals operate in a static fashion. They are preset to switch from green to red every 30 or 45 or 60 seconds, regardless of the traffic flow. A huge inefficiency emerges from this basic fact, and we can address it through technology. Dynamic traffic signals can process data from embedded road sensors and determine when the light should change, on 24- or 36-second (rather than 60-second) intervals, in order to improve the traffic flow.
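The interview describes dynamic signals only in outline. As a rough illustration, and not a description of any real traffic-control product, the sketch below splits one signal cycle's green time in proportion to the vehicle counts that hypothetical road sensors report for each approach. The function name, the minimum-green floor, and the default 8 seconds of yellow/all-red clearance per cycle are assumptions chosen for the example.

```python
def split_green_time(cycle_s, demand, min_green_s=10.0, lost_time_s=8.0):
    """Split one signal cycle's green time across approaches by sensed demand.

    cycle_s:     total cycle length in seconds (hypothetical parameter)
    demand:      dict mapping approach name -> vehicles counted by road sensors
    min_green_s: floor so that no approach is starved of green time
    lost_time_s: seconds per cycle spent on yellow/all-red clearance (assumed)
    """
    approaches = list(demand)
    usable = cycle_s - lost_time_s
    if usable < min_green_s * len(approaches):
        raise ValueError("cycle too short to give every approach its minimum green")

    # Each approach first receives the minimum; the remainder is shared by demand.
    spare = usable - min_green_s * len(approaches)
    total = sum(demand.values())
    greens = {}
    for a in approaches:
        # With no detected traffic at all, fall back to an even split.
        share = demand[a] / total if total > 0 else 1 / len(approaches)
        greens[a] = round(min_green_s + spare * share, 1)
    return greens
```

With a 68-second cycle and sensors counting 26 vehicles on the north-south approach against 14 on the east-west approach, `split_green_time(68, {"north_south": 26, "east_west": 14})` assigns 36 and 24 seconds of green, where a static timer would split the same 60 seconds evenly whatever the traffic. Real adaptive systems also weigh queue lengths, pedestrian phases, and coordination with neighboring intersections, which this sketch ignores.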

In many ways, today's technology can help solve longstanding transportation problems inherited from previous technologies. Autonomous vehicles can reduce the stress in people's lives, reduce pollution, and also produce much safer road conditions. Something like 90 percent of fatal driving accidents involve human error (due to alcohol use, distraction, or some other human-specific problem). Autonomous vehicles will not completely eradicate harmful accidents, yet they won't drive drunk or check their text messages. They should harm far fewer people than human drivers do today. But we'll need significant investments in redesigning our cities and transportation networks in order to gain these benefits.

America's distinct education and transportation challenges also raise acute variations on a question that came up for me throughout this book, in terms of if and how a reimagined US federalism can help to improve (rather than to complicate or impede or distort) longer-term AI developments. So alongside the vexing categorical questions of who should make which crucial decisions on our AI buildout (software designers? business leaders? elected officials? a public citizenry? informed consumers?), could you address this complementary question of how top-down or bottom-up a process you foresee in various sectors?

We need to reimagine federalism, and transportation provides an excellent example. Right now, many of the regulations affecting cars and trucks (or licensing drivers, or setting speed limits) take place at the state or local level. National regulations primarily focus on human safety, and designing safer cars. But autonomous vehicles will require more continuity for transportation regulations across the 50 states. Manufacturers cannot build one car model to meet Montana’s requirements and a different model for Illinois.

As you suggested, the entire built environment around car transportation likewise will need an increased degree of standardization. So here again, we can’t just think of technology innovation as a technical matter. Technological advance will demand rethinking our whole system of governance. We’ll need to ask: “Who should decide on these particular questions? At which levels of government? With what kind of coordination across agencies and jurisdictions?” Our current federal system no longer feels optimized for technology innovation. And beyond just complaining about this situation, we need to re-envision how American governance can harness technological benefits for its people.

Along related lines, e-commerce provides perhaps the most striking manifestations to date of how AI shapes economic competition and economic concentration — with the US facing both domestic challenges posed by a handful of tech companies’ market dominance, and geopolitical challenges posed by sizing up against Chinese markets’ economies of scale. So between the desire to scale down monopolistic concentration within the US, and to scale up our own commercial and data-analytic capacities relative to international rivals’, which e-commerce policy discussions seem most salient?

Even before COVID, we saw a rising shift towards e-commerce. COVID has of course accelerated this trend, with decisive economic consequences. Think of how much American shopping has moved online. Think of all the stores that have closed and probably won't come back, or all the customers who will remain wary of shopping in brick-and-mortar establishments for a long time to come, due to health concerns. Think of the scalability and distribution advantages pulling ever more of our commercial sector into e-commerce, with leading companies gaining more and more market share. This raises potential problems in terms of competition policy and the future of small businesses. We've reached the point where we need a national conversation over these issues, so that we can devise significant and appropriate regulatory reform.

Developments in Chinese e-commerce also provide a very interesting angle. China has bet heavily on e-commerce, and is completely comfortable having just a few dominant companies in key sectors. China's government actually has an easier time tracking developments when it only has to deal with two or three companies, rather than 300. This also can produce obvious AI advantages, in terms of pooling massive streams of synchronized data to analyze and draw lessons from. That gives the US a lot to think about in terms of economies of scale, both for domestic and international competitiveness. We have to make sure we don't get left behind in the international AI competition.

And again our patchwork governmental/regulatory system has allowed e-commerce to flourish, but now seems to pose potential chokepoints around questions of digital-infrastructure buildout, physical infrastructure and delivery mechanisms, zoning rules, tax policy. So let’s say that, more than for any other sector your book considers, Americans already see enough tangible benefit from e-commerce to push society towards effectively addressing these concerns. Which roles should which various stakeholders play in shaping an e-commerce sector that can work well for employers, employees, localized communities, and sustainable economic growth — all at once?

For the past few decades, private firms have made the most important decisions about the pace and priorities of technological innovation. But now we see many Americans no longer happy with that arrangement. We’re starting to depart from this libertarian stance — of letting companies themselves decide what works best. We’re seeing more public and government engagement on these questions.

For just one example, many readers can probably picture all the side effects from our increasing reliance on e-commerce, with constant delivery trucks crowding the streets in urban neighborhoods, double-parking and slowing up traffic. Alongside the convenience of home delivery, we get these annoyances of our new everyday life. So we’ll need to redesign our cities in response to increased e-commerce. We’ll need to figure out the most efficient and most effective delivery mechanisms. We’ll need to rethink our zoning regulations and our labor laws for the so-called independent contractors driving many of these trucks.

More broadly, we can sense the need for a new social contract for this whole digital economy, which will differ from the implicit and explicit social contract of our industrial economy (with its large concentrations of work in factories and warehouses). Today we need to make sure that all of our gig-economy workers, and all of our independent contractors, can access healthcare and disability-insurance coverage and unemployment protections and retirement planning — whether or not they work full-time for any one single firm.

What then might it look like for technology enthusiasts to “drop the outmoded mantra that regulation destroys innovation,” and to develop more nuanced distinctions: say between vertical regulatory paradigms (for specialized, sensitive sectors), and horizontal regulatory paradigms (widely diffused guiding principles)? How can each of these frameworks push back in its own distinct way against that escalating privatization of societal decision-making over the past few decades?

Historically, when a broad range of Americans start disliking or distrusting developments across an entire sector, government steps in to establish or rethink regulations. Today for our tech industries, and for our broader economy and society, we face questions of when and where we need regulations specific to a single sector — versus which rules should apply across the board in every sector, regardless of their particular applications.

Strengthened privacy protections, for example, could cut across every sector and affect almost every company. At present, we actually have pretty significant privacy rules in place for education and healthcare, but not so much in terms of e-commerce and consumer applications. Privacy probably demands an across-the-board approach, because many similar issues arise for a variety of businesses, and because weaknesses in one sector also make the others more vulnerable.

For other kinds of problems quite specific to healthcare, or education, or transportation, we may need more fine-tuned and precisely targeted regulations. You don't want to go across the board unless the problems themselves spread across the board. You really need to match the regulatory action to the particular problem it is meant to address.

Well if e-commerce again exemplifies how AI can redistribute the scope and scale of economic power, national-defense applications illustrate how AI can accelerate the speed (both in terms of tech innovation, and of combat itself) of geopolitical rivalry. AI buildout again will mean not just producing faster planes or drones or robots, but coordinating countless sensors, devices, vehicles, technological platforms — and exponentially accelerating adaptive decision-making processes. So could you sketch how these tech-enhanced military capacities will likewise need to call forth new human qualities, by demanding, say: “leaders of character… steeped in the imperative of reducing the relationship of action to decision and…educated in intuitive as opposed to deliberative decision-making”? How will humans have to adapt to stay in control of our war-making technologies?

Warfare will accelerate a wide range of new technologies. We’ve already seen drones, robots, and autonomous-weapons systems start to get utilized. But this also means that governments, militaries, and individual humans will need to adapt to stay in control of these systems. So for example, with drones right now, at least according to stated US policy, a human still has to press the button. The drone itself can’t decide to fire on a particular target. And we’ll need an expanded range of such national-defense guidelines to keep humans in charge.

My coauthor John Allen likes to emphasize that all of these emergent changes in warfare technologies will intensify the need for what he calls leaders of character. We'll need military decision-makers who can take advantage of data analytics and can quickly absorb a flood of information, but who also possess an instinctive knack for spotting the most relevant development at any given moment. This will no doubt require elevated technical and analytic training, but also well-honed intuitive human sensibilities.

Of course at the same time, amid prospects for hyperwar, any number of possibilities arise for hacking, feints, or murky gray-war incidents that trigger destructive (perhaps mutually destructive) escalations, especially so long as our technical advances continue to outpace our national and international protocols for engagement. We also need to ensure fluid interoperability across various domains and military units, and with allies. We need to ramp up national-security measures to restrict technology transfers to would-be adversaries. And we need to do all of this without unnecessarily prompting an expanded trade war or a second Cold War. So here the question becomes: alongside a new facility with speed, which elements of prudence, diplomacy, and deliberative long-game strategizing seem most crucial to US national-security interests in an AI age?

We need to devote significant attention to long-term strategizing across our whole society. High-tech warfare will only get faster and more complex. But that also makes intelligence capabilities and diplomatic relations more crucial than ever. Both in combat and in statecraft much more broadly, leaders will need well-developed capacities for human judgment that help them to integrate information from different perspectives and sources, to deploy new technologies, and to arrive at timely and effective decisions. Emerging technologies will require new skillsets across society, and future governmental leaders on all fronts (military and otherwise) will need to absorb those lessons in order to take advantage of AI.

Within governmental institutions themselves, for one model of what contemporary organizational agility can look like, could you describe how distributed decision-making plays out in exemplary fashion for the National Institute of Standards and Technology? And more generally, how might both public and private organizations benefit from an AI-enhanced redistribution of decision-making authority: freeing up significant human effort presently focused on documenting/monitoring employee behavior — and granting all participants a more nimble, empowering, and dignified sense of agency and responsibility?

The old government model of command and control, with its top-down hierarchical approach to decision-making, is not very effective, especially in a digital world. The public officials at the top of such hierarchies often don't have a good understanding of digital technologies. Regulatory processes move much slower than the actual pace of innovation. With government always running several steps behind the companies, it can't do a very effective job of limiting bad corporate behavior.

So in our book, John Allen and I argue that government needs to update its processes, and embrace more distributed and collaborative procedures, drawing on expertise from a variety of sectors. For example, many societal challenges involving AI require knowledge of computer science, ethics, the social sciences, and the humanities. To deal effectively with questions of racial bias, unfair algorithms, or intrusive data sharing, we have to bring together experts from several areas, and have them develop common standards for addressing these fundamental problems.

The National Institute of Standards and Technology provides a good model for that. It regularly brings people together to discuss major technical problems, and make recommendations on how to fix those problems. Its approach addresses pressing concerns in real time, and comes up with practical and effective solutions. This model can and should be employed widely to deal with AI and emerging technologies.

Controversies over autonomous-weapons systems have generated their own distinct forms of techlash within leading Big Tech firms themselves. And more broadly, for public concerns over how AI can compromise personal privacy, intensify surveillance, reinforce existing inequalities, prompt mass job displacement (not to mention the existential dangers posed by our human species’ precarious status relative to sophisticated machines), where do you see today’s AI skepticism stemming from anxieties over specific applied technologies, and where from more generalized doubts that contemporary private and public institutions have ordinary Americans’ well-being in mind? Or what most concrete policy recommendations might you offer for first convincing most Americans that AI deployment will serve the general good (and not its opposite)?

I consider it a very positive sign that more companies have started hiring ethicists, and implementing AI ethics codes. Some now have internal AI review boards. They train their people to make the most of AI’s upsides, as well as to minimize the downsides of technology innovation.

We need more of these developments in corporate offices, and we also need broader policy changes to address an emerging public techlash. The Edelman Trust Barometer, which recently surveyed over 34,000 people around the world, found that 61 percent consider today's pace of technological change too fast. And I doubt we'll actually see that pace of innovation slow down. But we can proactively shape how this all plays out. We've seen, for example, a number of local governments start imposing restrictions on law enforcement's use of facial-recognition software. We've seen significant public concerns about new technological applications throughout the criminal-justice system.

Gig-economy industries are seeing labor regulation that improves working conditions for people driving Ubers and delivering products to our homes. Some cities have started adopting restrictions on Airbnb, to limit how much rental activity can take place in residential buildings and neighborhoods. Several states have recently passed stronger privacy laws. So a new social contract of sorts already has started to emerge at local and state levels, and we probably will see more national action as well.

To close then, could you make the historical case for why we lost the Congressional Office of Technology Assessment precisely when legislators needed it most? And could you flesh out your ideal for an updated “dedicated body that undertakes research, assesses policy and regulatory alternatives, and helps lawmakers come to grips with AI, robotics, facial recognition, and virtual reality, among other developments”?

After the 1994 election, with Republicans recapturing control of the House for the first time in 40 years, Newt Gingrich became Speaker and wanted to downsize government. He picked as one of his targets Congress's own Office of Technology Assessment (OTA). Gingrich killed that agency, ironically, it turned out, just at a mid-1990s turning point when the Internet started taking off and the digital revolution unfolded.

Over the past 25 years, Congress definitely could have benefited from OTA’s input, analysis, and advice. Instead, we’ve lacked any clear federal contribution to discussions of digital technology’s upsides and downsides, or of how and when government (which of course funded much basic research and early work building these technologies) should play a role in shaping their ongoing development and impact on society. It was stunningly poor judgment on the part of Gingrich and his fellow legislators.

We clearly could use such an agency today in order to address current problems, and those likely to develop over the next five to 10 years. We need a sustained effort focused on the ethical and societal challenges of AI. If we get these issues right, then the AI future looks bright. But if we fail to make good decisions, developments could spiral out of control rather quickly. That’s why John Allen and I titled this book Turning Point. We’ve reached a major inflection point. Things could go either way. We all need to think carefully about how to make the best choices.