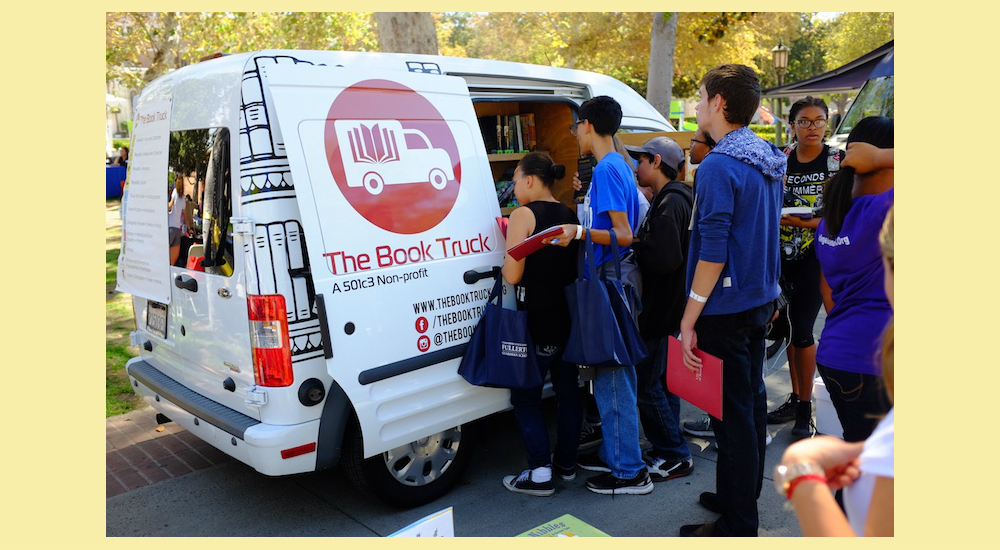


Rescued by Books: Fostering Teen Literacy in Low-Income Communities

Ruth Ebenstein discusses book deserts, teen literacy, and the work of The Book Truck.

Andy Fitch interviews writer and translator Nathanaël.

Elyse Joseph describes the history of African-Americans living in Orange County and the county's past as a "Sundown Town."

Jake Fuchs discusses the inspiration behind his new satirical novel, "Welcome, Scholar."

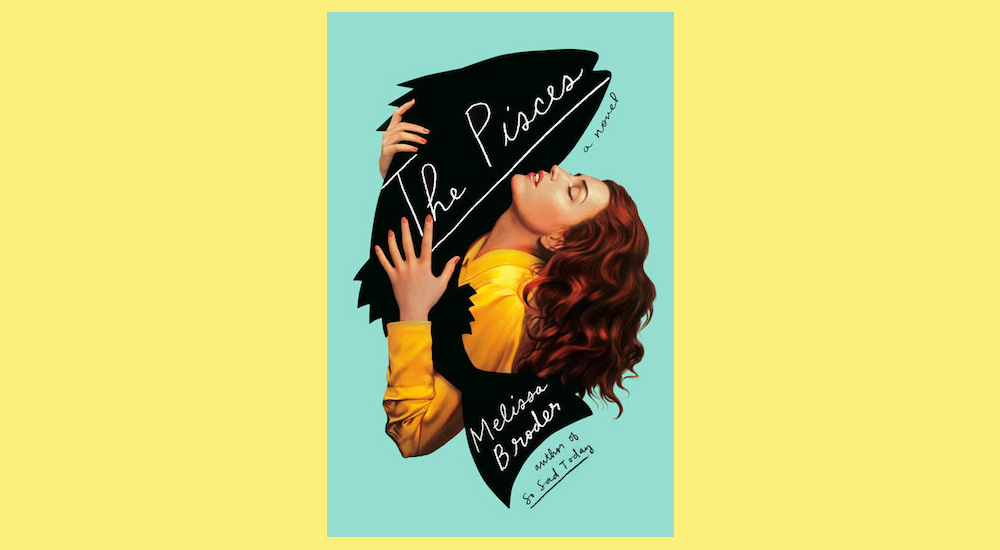

Anna Dorn talks to Melissa Broder about her new novel "The Pisces."

Sara Lipton visits the Museum of the Bible in Washington, D.C.

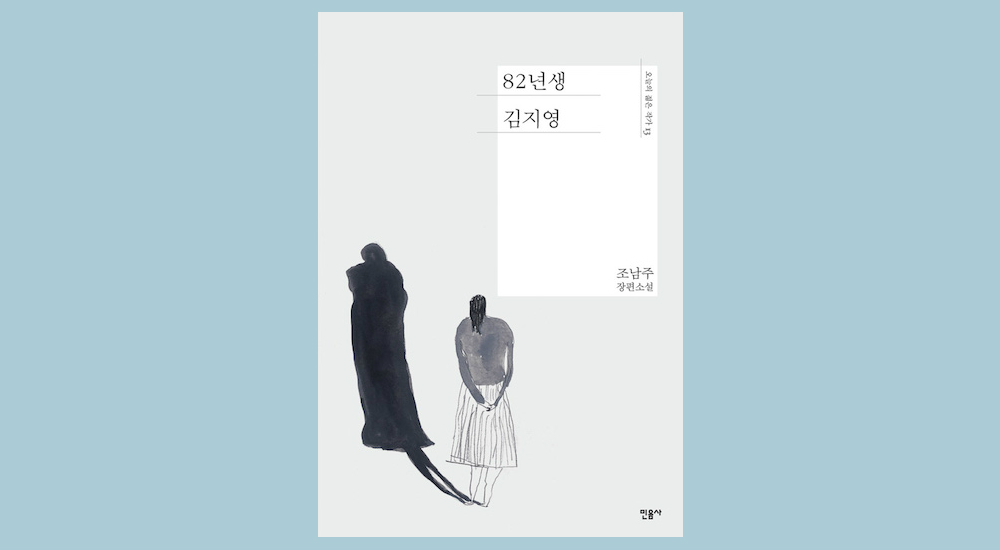

Colin Marshall on last year's best-selling novel "Kim Ji-young Born 1982."

Nathan Scott McNamara interviews John Porcellino, writer and artist of the comics "King-Cat" and "From Lone Mountain."

Andy Fitch interviews Jonathan Lear about his recent collection, "Wisdom Won from Illness: Essays in Philosophy and Psychoanalysis."

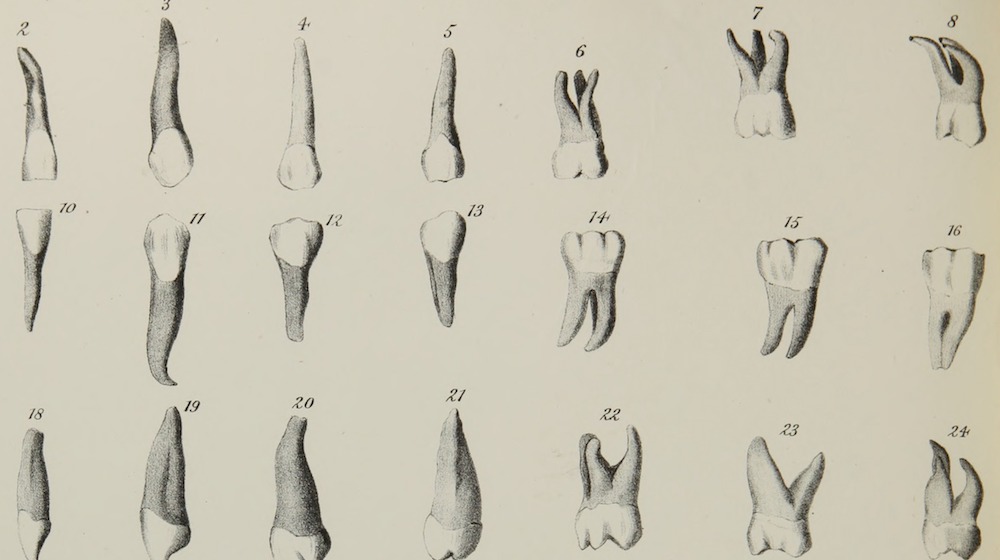

Ani Kokobobo reflects on the genre of "teeth stories," Eastern European dentistry, and her own molar extraction.